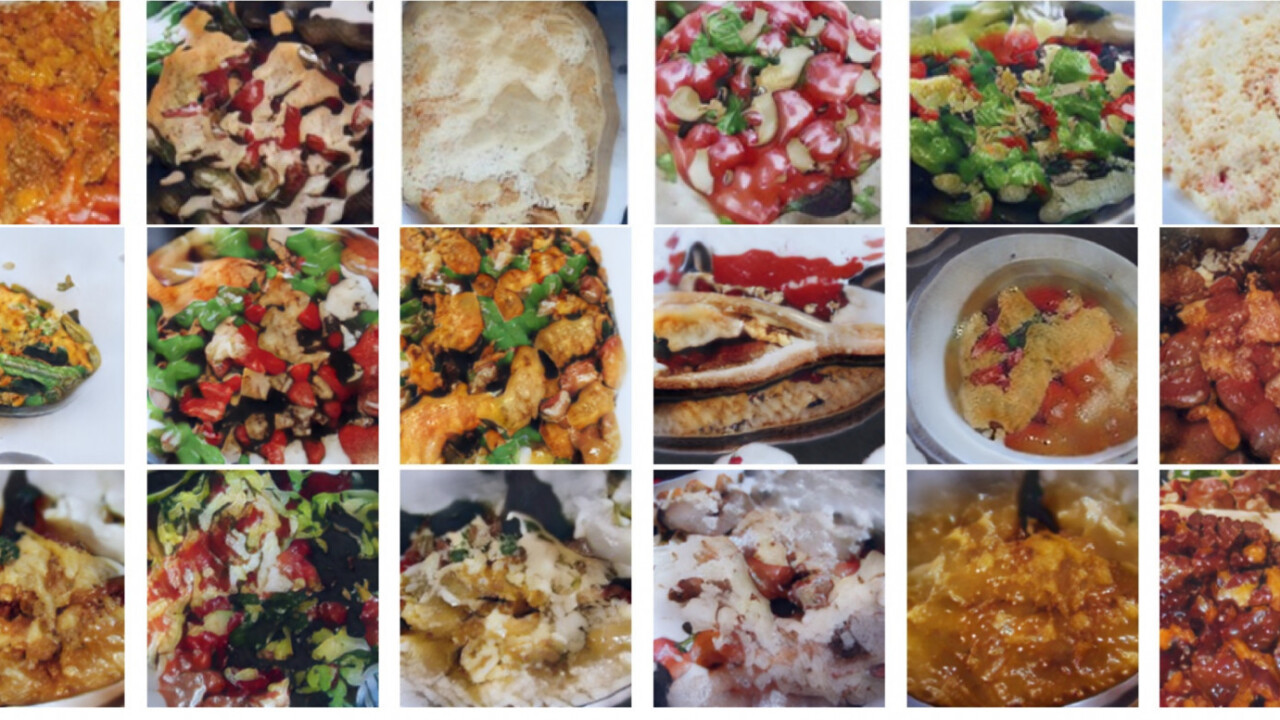

A team of researchers from Tel-Aviv University developed a neural network capable of reading a recipe and generating an image of what the finished, cooked product would look like. As if DeepFakes weren’t bad enough, now we can’t be sure the delicious food we see online is real.

The Tel-Aviv team, consisting of researchers Ori Bar El, Ori Licht, and Netanel Yosephian, created their AI using a modified version of a generative adversarial network (GAN) called StackGAN-v2 and 52K image/recipe pairs from the gigantic Recipe1M dataset.

Basically, the team developed an AI that can take almost any list of ingredients and instructions, and figure out what the finished food product looks like.

Researcher Ori Bar El told TNW:

[It] all started when I asked my grandmother for the recipe for her legendary fish cutlets with tomato sauce. Due to her advanced age, she didn't remember the exact recipe. So I wondered whether I could build a system that, given a food image, could output the recipe. After thinking about this task for a while, I concluded that it is too hard for a system to get an exact recipe with real quantities and with "hidden" ingredients such as salt, pepper, butter, flour, etc.

Then I wondered if I could do the opposite instead: namely, generating food images based on the recipes. We believe this task is very challenging for humans, and all the more so for computers. Since most current AI systems try to replace human experts in tasks that are easy for humans, we thought it would be interesting to solve a kind of task that is beyond humans' ability. As you can see, it can be done with a certain degree of success.

The researchers also acknowledge in their research paper that the system isn't perfect quite yet:

It is worth mentioning that the quality of the images in the recipe1M dataset is low in comparison to the images in CUB and Oxford102 datasets. This is reflected by lots of blurred images with bad lighting conditions, "porridge-like images" and the fact that the images are not square shaped (which makes it difficult to train the models). This fact might give an explanation to the fact that both models succeeded in generating "porridge-like" food images (e.g. pasta, rice, soups, salad) but struggled to generate food images that have a distinctive shape (e.g. hamburger, chicken, drinks).

This is the only AI of its kind that we know of, so don't expect it to be an app on your phone anytime soon. But the writing is on the wall. Give the Tel-Aviv team's AI a recipe, and it can turn it into an image that looks good enough that, according to the research paper, humans sometimes prefer it over a photo of the real thing.

The team intends to continue developing the system, hopefully extending into domains beyond food. Ori Bar El told us:

We plan to extend the work by training our system on the rest of the recipes (we have about 350k more images), but the problem is that the current dataset is of low quality. We have not found any other available dataset suitable for our needs, but we might build our own dataset containing children's book text and the corresponding images.

These talented researchers may have damned Instagram foodies to a world where we can't quite be sure whether what we're drooling over is real, or some robot's vision of a soufflé.

It’s probably a good time for us all to go out into the real world and stick our faces in some actual food. You know, the kind created by scientists and prepared by robots.