Many technology professionals bristle at the word “ethics,” seeing it as an intangible topic more likely to show up as a required training course than as part of daily risk mitigation.

But when called to defend how you are mitigating the brand and PR risk of a Tay chatbot or a 737 MAX autopilot, you are likely to “find religion” in the form of ethics. Deployment of autonomous and intelligent systems (A/IS) is accelerating, and managing the risks will be fundamentally different from previous technology waves.

We used to build confidence in technology via testing and certification. But if you could ask Tay, the chatbot would tell you that exhaustive testing is impossible, because you no longer control the inputs reaching your system or algorithms. In an A/IS world, the number of possible test cases is effectively infinite: many can be simulated, but not all can be tested. To convince your customers, CEO, board, employees, and social responsibility leaders, you will need both testing and an ethical framework.

What makes A/IS different?

Technology adoption in business is routine. But A/IS is fundamentally different from prior generations of technology.

Risk is shifting from humans to machines

- Traditionally, controls have been placed on the humans who operate technology and on the inputs they create, but more and more technology now operates algorithmically and autonomously.

- Even those developing algorithms cannot fully predict how they will operate “in the wild.”

- For A/IS there are situations with no humans in the loop.

- Insuring against A/IS risks is fundamentally different from evaluating human competence and capability.

- Current laws identify an individual person as the responsible party; the accountability of A/IS is not always obvious to insurers or regulators.

Business adoption of A/IS is accelerating

- Massive cost reductions are being realized through A/IS.

- Those paying $3,000/hr for a manned helicopter for inspections can get the same results with a drone for less than $100/hr.

- Algorithmic trading in the securities industry identifies nuanced arbitrage opportunities measured in the hundreds of millions of dollars.

- Chatbots dramatically reduce the cost of human customer service.

- Physical instantiations of A/IS (e.g. drones, autopilot systems) with notable negative consequences (e.g. the 737 MAX) will drive a public outcry for better risk management and insurance.

Insurance as a proxy for regulation

- Technology advancement massively outpaces regulation.

- Insurance is an obvious requirement to promote the Autonomy Economy.

- New insurance underwriting techniques are required to measure and price A/IS risk.

- An ethical framework around your A/IS is becoming an insurance requirement.

- Manufacturers of A/IS systems currently self-insuring will suffer as incidents and claims make self-insurance fiscally unsound.

Data is the difference

- Traditionally, technology risk was mitigated through testing and certifications.

- With A/IS and learning systems, no set of test data or test cases can predict every eventuality. And since data is the variable, redundancy no longer mitigates the risk.

- Since we cannot authoritatively know an A/IS is operating safely, emphasis shifts to graceful shutdown and the ability to “fail safely” when confronted with edge cases, situations an AI system has not been trained to handle.

So what can be done?

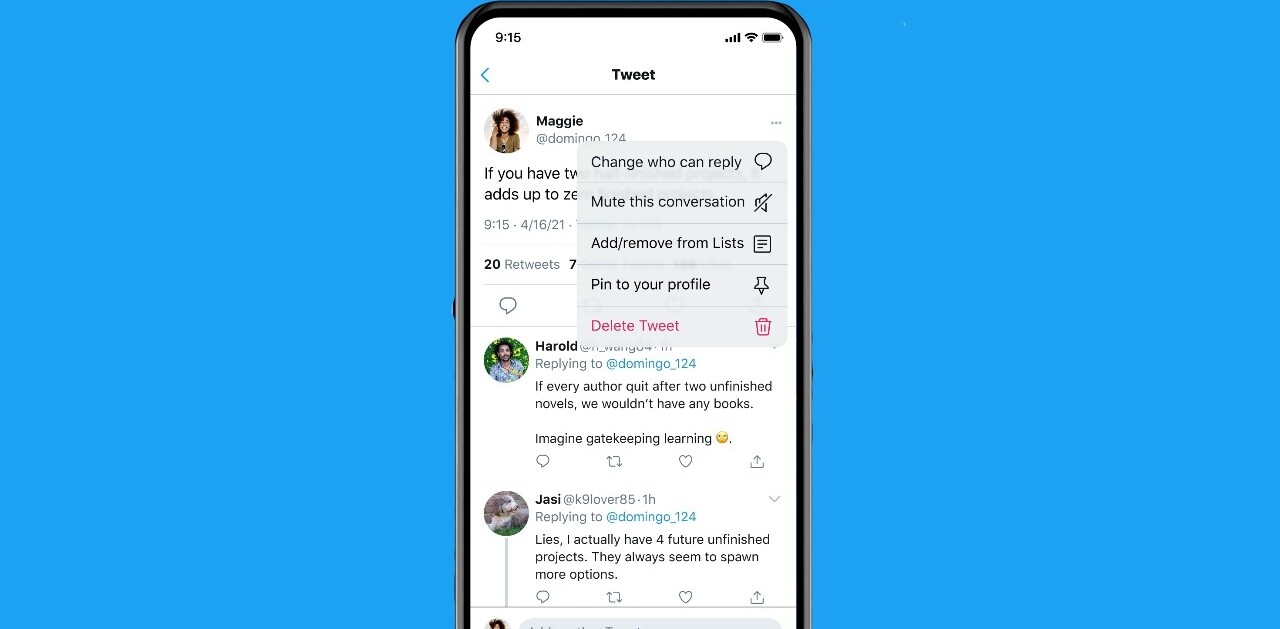

While ethical frameworks can seem daunting, there are simple ways to begin. The graphic below describes how an ethical framework can be part of the technology narrative.

Ethical framework starter kit

To be a leader in managing A/IS risk, a company must imbue ethics throughout development, testing, and operational processes. There are many emerging standards under development from the IEEE and other trusted organizations in this area.

A/IS has no human operator to “hit the kill switch” when something strange happens. An ethical framework must therefore incorporate a “supervisory” function outside of the A/IS, one that constantly monitors for inappropriate behavior and can “hit the kill switch” to initiate a graceful shutdown or fail-safe routine.

While it is difficult to achieve consistency across laws, regulations, and social norms, a starting point is a set of Rules of Reasonableness.

Examples may include:

- A chatbot with excessive use of expletives or other key words violating social norms would be operating outside intended ethical boundaries.

- A securities trading algorithm conducting a material percentage of broad market trades would reasonably be evaluated to be operating outside its boundaries.

- A drone or aircraft whose angle of attack sensor indicates a nose-down angle of more than 45 degrees while the autopilot recommends continued nose-down action would be acting outside its intended boundaries.
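
The examples above can be expressed as simple checks run by a supervisor that sits outside the A/IS. What follows is only a minimal sketch in Python, not a reference implementation: the class names, telemetry keys, and threshold values (other than the 45 degree figure) are hypothetical and chosen for illustration, and a real deployment would need its own domain-specific rules and shutdown procedure.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Mapping

# Hypothetical thresholds, for illustration only.
MAX_EXPLETIVE_RATIO = 0.05   # share of chatbot output that is expletives
MAX_MARKET_SHARE = 0.10      # share of broad market trades from one algorithm
MAX_NOSE_DOWN_DEG = 45.0     # nose-down angle from the example above


@dataclass
class Rule:
    """One Rule of Reasonableness: a name and a test over telemetry."""
    name: str
    violated: Callable[[Mapping[str, Any]], bool]


def default_rules() -> List[Rule]:
    # Telemetry keys are assumptions; missing keys count as "no violation".
    return [
        Rule("chatbot_expletives",
             lambda t: t.get("expletive_ratio", 0.0) > MAX_EXPLETIVE_RATIO),
        Rule("trading_market_share",
             lambda t: t.get("market_share", 0.0) > MAX_MARKET_SHARE),
        Rule("nose_down_command",
             lambda t: t.get("nose_down_deg", 0.0) > MAX_NOSE_DOWN_DEG
             and t.get("commanding_nose_down", False)),
    ]


@dataclass
class Supervisor:
    """Monitors an A/IS from the outside and triggers a fail-safe routine."""
    rules: List[Rule]
    shutdown: Callable[[str], None]  # graceful shutdown hook supplied by the caller
    halted: bool = False

    def check(self, telemetry: Mapping[str, Any]) -> List[str]:
        """Return the names of violated rules; call shutdown once, on the first violation."""
        broken = [rule.name for rule in self.rules if rule.violated(telemetry)]
        if broken and not self.halted:
            self.halted = True
            self.shutdown(", ".join(broken))
        return broken
```

The supervisor never tries to judge whether the A/IS is behaving correctly in general. It only detects behavior that is plainly unreasonable and hands control to a shutdown routine, which keeps the rules easy to explain to insurers and risk teams.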

Building simple ethical frameworks with supervisory functions to evaluate A/IS behavior is therefore not only the right thing to do; it will also be a requirement for insurance coverage and a condition your risk management team sets before deploying new technology.
