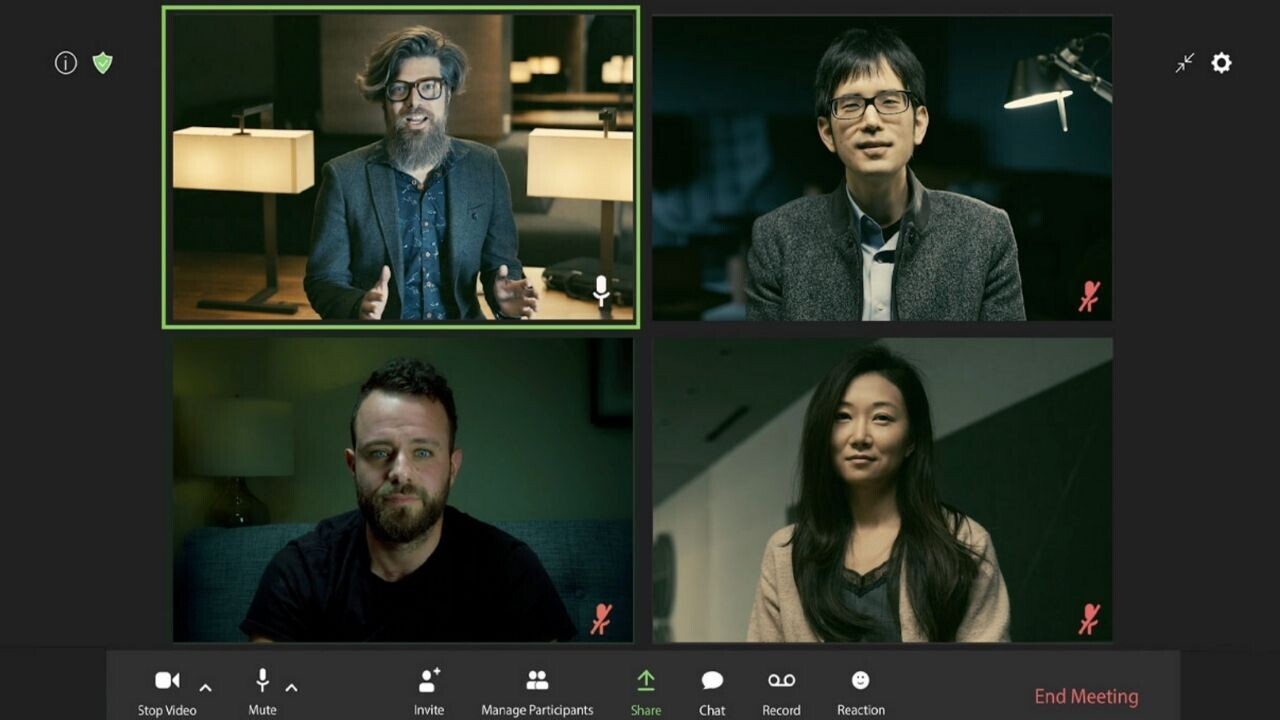

Nvidia has unveiled an AI model that converts a single 2D image of a person into a “talking head” video.

Known as Vid2Vid Cameo, the deep learning model is designed to improve the experience of videoconferencing.

If you’re running late for a call, you could roll out of bed in your pajamas with disheveled hair, upload a photo of yourself dressed to impress, and the AI will map your facial movements to the reference image, leaving the other attendees unaware of the chaos behind the camera. That could be a boon for the chronically unkempt, but you should probably test the technique before you turn up in your birthday suit.

The system can also adjust your talking head’s viewpoint to show you looking straight at the screen, when secretly your eyes are fixed on a TV in the background.


They sound like nifty features for those of us who dread video calls, but the most useful aspect of the model may be bandwidth reduction. Nvidia says the technique can cut the bandwidth needed for video conferences by up to 10x.

GANimated video

Vid2Vid Cameo is powered by generative adversarial networks (GANs), which produce the videos by pitting two neural networks against each other: a generator that tries to create realistic-looking samples, and a discriminator that attempts to work out whether they’re real or fake.
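To make the generator-versus-discriminator tug of war concrete, here is a minimal sketch of the textbook GAN losses in plain Python. It is not Nvidia's training code: the function names and the example scores are hypothetical, and the losses Vid2Vid Cameo actually uses aren't described here.

```python
import math


def bce(prediction: float, target: float) -> float:
    """Binary cross-entropy for a single probability in (0, 1)."""
    eps = 1e-12  # clamp to avoid log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """The discriminator wants to score real samples as 1 and fakes as 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake: float) -> float:
    """The generator wants the discriminator to score its fakes as 1.

    This is the common "non-saturating" form of the generator loss.
    """
    return bce(d_fake, 1.0)


if __name__ == "__main__":
    # Hypothetical discriminator scores: confident on real, unconvinced by fake.
    print("discriminator loss:", discriminator_loss(d_real=0.9, d_fake=0.1))
    print("generator loss:", generator_loss(d_fake=0.1))
```

The two losses pull in opposite directions: as the generator's samples fool the discriminator more often, the generator's loss falls and the discriminator's rises.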

This adversarial training lets the generator synthesize realistic videos from a single image of the user, which can be a real photo or a cartoon avatar. During the video call, the model captures the user's real-time motion and applies it to the uploaded image.

The model was trained on a dataset of 180,000 talking-head videos, which taught the network to identify 20 key points that encode the location of features including the mouth, eyes, and nose.

These points are then extracted from the image uploaded by the user to generate a video that mimics their appearance.
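For a rough sense of why a handful of key points is so much lighter than full video frames, here is a back-of-the-envelope sketch in Python. Only the figure of 20 key points comes from the description above. The three 32-bit float coordinates per point, the 30 frames per second, and the function names are illustrative assumptions, not Nvidia's wire format.

```python
import struct

NUM_KEYPOINTS = 20     # figure quoted in the article
COORDS_PER_POINT = 3   # assumption: (x, y, z) per key point
BYTES_PER_FLOAT = 4    # assumption: 32-bit floats
FPS = 30               # assumption: typical video call frame rate


def pack_keypoints(points):
    """Serialize key points as little-endian float32 values."""
    if len(points) != NUM_KEYPOINTS:
        raise ValueError(f"expected {NUM_KEYPOINTS} key points, got {len(points)}")
    if any(len(p) != COORDS_PER_POINT for p in points):
        raise ValueError(f"each key point needs {COORDS_PER_POINT} coordinates")
    flat = [coord for point in points for coord in point]
    return struct.pack(f"<{len(flat)}f", *flat)


def unpack_keypoints(payload):
    """Inverse of pack_keypoints: bytes back to a list of coordinate tuples."""
    count = len(payload) // BYTES_PER_FLOAT
    flat = struct.unpack(f"<{count}f", payload)
    return [tuple(flat[i:i + COORDS_PER_POINT])
            for i in range(0, count, COORDS_PER_POINT)]


def keypoint_kbps(fps=FPS):
    """Raw key point bitrate in kilobits per second, before any compression."""
    bytes_per_frame = NUM_KEYPOINTS * COORDS_PER_POINT * BYTES_PER_FLOAT
    return bytes_per_frame * 8 * fps / 1000


if __name__ == "__main__":
    frame = [(0.5, 0.25, 0.0)] * NUM_KEYPOINTS  # made-up coordinates
    payload = pack_keypoints(frame)
    print(len(payload), "bytes per frame")
    print(keypoint_kbps(), "kbps for key points alone")
```

Under these assumptions, each frame's key points fit in a few hundred bytes. A real system would also need to send the reference image once and any extra per-frame data the model requires, so treat this as intuition rather than a measurement.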

The model will be available soon in the Nvidia Maxine SDK and Nvidia Video Codec SDK, but you can already try a demo of it here. I gave it a go, and was pretty impressed by the system’s efforts, although I wouldn’t let it cut my hair.

You can read a research paper on the technique here.
