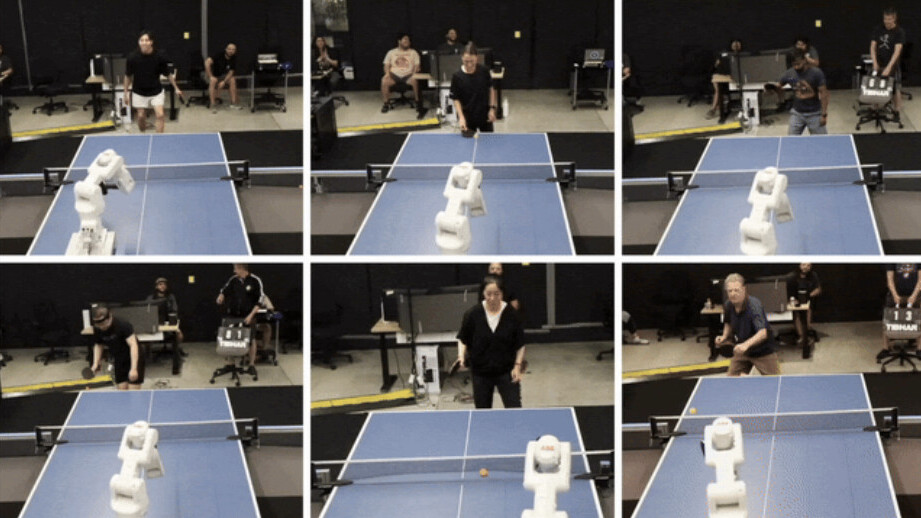

China is currently busy accumulating most of the gold medals in the table tennis events in the Paris Olympics. Meanwhile, an AI-powered robot from Google DeepMind has achieved “amateur human level performance” in the sport.

In a paper published on arXiv this week, the Google artificial intelligence subsidiary outlined how the robot functions and shared footage of it taking on what we can only assume were willing, enthusiastic ping pong players of varying skill.

According to DeepMind, the racket-wielding robot had to be good at low-level skills, like returning the ball, as well as more complex tasks, like long-term planning and strategising. It also played against opponents with diverse styles, drawing on vast amounts of data to refine and adapt its approach.

Not Olympic level quite yet

The robotic arm, with its 3D-printed racket, won 13 out of 29 matches (about 45%) against human opponents of differing skill levels. It won 100% of matches against “beginner” players and 55% against “intermediate” players. However, it lost every time it faced an “advanced” opponent.

DeepMind said the results of the project constitute a step towards the goal of achieving human-level speed and performance on real-world tasks, a “north star” for the robotics community.

To achieve these results, its researchers say they relied on four contributions that could also make the findings useful beyond hitting a small ball over a tiny net, difficult though that may be:

- A hierarchical and modular policy architecture

- Techniques to enable zero-shot sim-to-real including an iterative approach to defining the training task distribution grounded in the real-world

- Real-time adaptation to unseen opponents

- A user-study to test the model playing actual matches against unseen humans in physical environments

The company added that its approach had led to “competitive play at human level and a robot agent that humans actually enjoy playing with.” Indeed, its non-robot competitors in the demonstration videos do seem to be enjoying themselves.

Table tennis robotics

Google DeepMind is not the only robotics company to choose table tennis to train its systems. The sport requires hand-eye coordination, strategic thinking, speed, and adaptability, among other things, making it well suited to training and testing these skills in AI-powered robots.

The world’s “first robot table tennis tutor” was acknowledged by Guinness World Records in 2017. The rather imposing machine was developed by Japanese electronics company OMRON. Its latest iteration is the FORPHEUS (stands for “Future OMRON Robotics technology for Exploring Possibility of Harmonized aUtomation with Sinic theoretics,” and is also inspired by the ancient mythological figure Orpheus…).

OMRON says it “embodies the relationship that will exist between humans and technology in the future.”

Google DeepMind makes no such existential claims for its recent ping pong champion, but the findings from its development may still prove profound for our robot friends down the line. We do, however, feel that DeepMind’s robotic arm is severely lacking in the abbreviation department.
