This article contains graphically sexual language.

Google’s Keyword Planner, which helps advertisers choose which search terms to associate with their ads, offered hundreds of keyword suggestions related to “Black girls,” “Latina girls,” and “Asian girls,” the majority of them pornographic, The Markup found in its research.

Searches in the keyword planner for “boys” of those same ethnicities also primarily returned suggestions related to pornography.

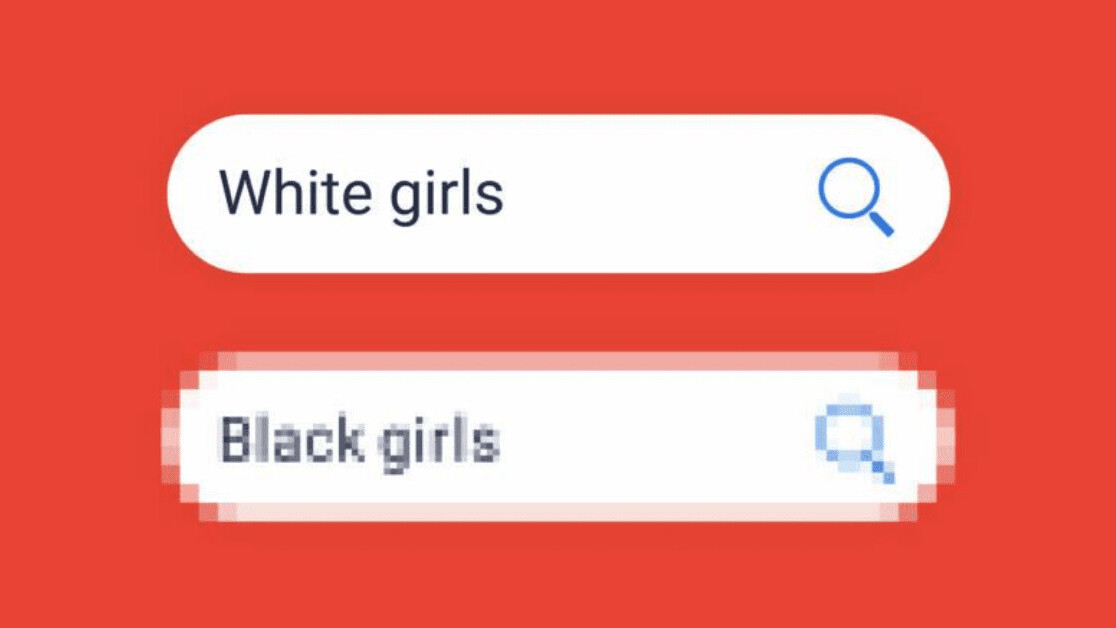

Searches for “White girls” and “White boys,” however, returned no suggested terms at all.

Google appears to have blocked results from terms combining a race or ethnicity and either “boys” or “girls” from being returned by the Keyword Planner shortly after The Markup reached out to the company for comment about the issue.

These findings indicate that, until The Markup brought it to the company’s attention, Google’s systems contained a racial bias that equated people of color with objectified sexualization while exempting White people from any associations whatsoever. In addition, by not offering a significant number of non-pornographic suggestions, this system made it more difficult for marketers attempting to reach young Black, Latinx, and Asian people with products and services relating to other aspects of their lives.

Google’s Keyword Planner is an important part of the company’s online advertising ecosystem. Online marketers regularly use the tool to help decide what keywords to buy ads near in Google search results, as well as other Google online properties. Google Ads generated more than $134 billion in revenue in 2019 alone.

“The language that surfaced in the keyword planning tool is offensive and while we use filters to block these kinds of terms from appearing, it did not work as intended in this instance,” Google spokesperson Suzanne Blackburn wrote in a statement emailed to The Markup. “We’ve removed these terms from the tool and are looking into how we stop this from happening again.”

Blackburn added that just because something was suggested by the Keyword Planner tool, it doesn’t necessarily mean ads using that suggestion would have been approved to be served to users of Google’s products. The company did not explain why searches for “White girls” and “White boys” on the Keyword Planner did not return any suggested results.

Eight years ago, Google was publicly shamed for this exact same problem in its flagship search engine. UCLA professor Safiya Noble wrote an article for Bitch magazine describing how searches for “Black girls” regularly brought up porn sites in top results. “These search engine results, for women whose identities are already maligned in the media, only further debase and erode efforts for social, political, and economic recognition and justice,” she wrote in the article.

In the piece, Noble detailed how she, for years, would tell her students to search for “Black girls” on Google, so they could see the results for themselves. She says the students were consistently shocked at how all the top results were pornographic, whereas searches for “White girls” yielded more PG results.

Google quickly fixed the problem, though the company didn’t make any official statements about it. Now, a search for “Black girls” returns links to nonprofit groups like Black Girls Code and Black Girls Rock.

But the association did not change in the ad-buying portal until this week, The Markup found.

When The Markup entered “Black girls” into the Keyword Planner, Google returned 435 suggested terms. Google’s own porn filter flagged 203 of the suggested keywords as “adult ideas.” While exactly how Google defines an “adult idea” is unclear, the filtering suggests Google knew that nearly half of the results for “Black girls” were adult.

Many of the 232 terms that remained would also have led to pornography in search results, meaning that the “adult ideas” filter wasn’t completely effective at identifying key terms related to adult content. The filter allowed through suggested key terms like “Black girls sucking d—”, “black chicks white d—” and “Piper Perri Blacked.” Piper Perri is a White adult actress, and Blacked is a porn production company.

“Within the tool, we filter out terms that are not consistent with our ad policies,” Blackburn said. “And by default, we filter out suggestions for adult content. That filter obviously did not work as intended in this case and we’re working to update our systems so that those suggested keywords will no longer be shown.”

Racism embedded in Google’s algorithms has a long history.

A 2013 paper by Harvard professor Latanya Sweeney found that searching traditionally Black names on Google was far more likely to display ads for arrest records associated with those names than searches for traditionally White names. In response to an MIT Technology Review article about Sweeney’s work, Google wrote in a statement that its online advertising system “does not conduct any racial profiling” and that it’s “up to individual advertisers to decide which keywords they want to choose to trigger their ads.”

However, one of the background check companies whose ads came up in Sweeney’s searches insisted to the publication, “We have absolutely no technology in place to even connect a name with a race and have never made any attempt to do so.”

In 2015, Google was hit with controversy when its Photos service was found to be labeling pictures of Black people as gorillas, furthering a long-standing racist stereotype. Google quickly apologized and promised to fix the problem. However, a report by Wired three years later revealed that the company’s solution was to block all images tagged as being of “gorillas” from turning up in search results on the service. “Image labeling technology is still early and unfortunately it’s nowhere near perfect,” a company spokesperson told Wired.

The following year, researchers in Brazil discovered that searching Google for pictures of “beautiful woman” was far more likely to return images of White people than Black and Asian people, and searching for pictures of “ugly woman” was more likely to return images of Black and Asian people than White people.

“We’ve made many changes in our systems to ensure our algorithms serve all users and reduce problematic representations of people and other forms of offensive results, and this work continues,” Blackburn told The Markup. “Many issues along these lines have been addressed by our ongoing work to systematically improve the quality of search results. We have had a fully staffed and permanent team dedicated to this challenge for multiple years.”

LaToya Shambo, CEO of the digital marketing firm Black Girl Digital, says Google’s association of Black, Latina, and Asian “girls” with pornography was really just holding up a mirror to the internet. Google’s algorithms function by scraping the web. She says porn companies have likely done a more effective job creating content that Google can associate with “Black girls” than the people who are making non-pornographic content speaking to the interests of young Black women.

“There is just not enough editorial content being created that they can crawl and showcase,” she said. Google, she said, should change its Keyword Planner algorithm. “But in the same breath, content creators and black-owned businesses should be creating content and using the most appropriate keywords to drive traffic.”

Blackburn, the Google spokesperson, agreed that because Google’s products are constantly incorporating data from the web, biases and stereotypes present in the broader culture can become enshrined in its algorithms. “We understand that this can cause harm to people of all races, genders and other groups who may be affected by such biases or stereotypes, and we share the concern about this. We have worked, and will continue to work, to improve image results for all of our users,” she said.

She added that the company has a section of its website dedicated to detailing its efforts to develop responsible practices around artificial intelligence and machine learning.

For Noble, who in 2018 published a book called “Algorithms of Oppression” that examines the myriad ways complex technical systems perpetuate discrimination, there are still major questions as to why search engines aren’t recognizing and highlighting online communities of color in their algorithms.

“I had found that a lot of the ways that Black culture was represented online wasn’t the way that communities were representing themselves,” Noble told The Markup. “There were all kinds of different online Black communities and search engines didn’t quite seem to sync up with that.”

While Noble’s work has focused on “Black girls,” she worries that because the same sexualizing dynamic exists in searches like “Latina girls” and “Asian boys,” along with the same issue appearing across Google’s ecosystem of products over the better part of a decade, the problem may run very deep.

“Google has been doing search for 20 years. I’m not even sure most of the engineers there even know what part of the code to fix,” she said. “You hear this when you talk to engineers at many big tech companies, who say they aren’t really sure how it works themselves. They don’t know how to fix it.”

This article was originally published on The Markup by Leon Yin and Aaron Sankin and was republished under the Creative Commons Attribution-NonCommercial-NoDerivatives license.
