As countries continue to grapple with the rise of online extremism, Australia is taking its first step by cutting off access to any internet domain that hosts terrorist material during a crisis event.

In addition to temporary bans, the government is reportedly considering legislation to force digital platforms to improve the safety of their services.

Popular platforms, including Facebook, YouTube, Amazon, Microsoft, and Twitter, have been given until the end of next month to share enforcement details with the government.

Taking down ‘abhorrent’ content

“We are doing everything we can to deny terrorists the opportunity to glorify their crimes,” said Australian Prime Minister Scott Morrison, according to Reuters.

Officials are said to be working on a framework to determine what kinds of “abhorrent” violent material — including murder, sexual assault, torture, and kidnapping — would be removed on a case-by-case basis. The decisions would be taken by Australia’s eSafety Commissioner, the department overseeing online safety.

Furthermore, the government intends to establish a 24/7 Crisis Coordination Centre to watch out for extremist content in online discourse. But there appears to be no clarity yet on what the consequences would be if platforms hosting such material don’t comply.

Other implementation questions remain: would an entire website, say YouTube, be blocked if an offending video is found? Or would it just be the link to the post or video? How long before the ban is lifted? What if citizens use VPN services to circumvent the country-wide blockade? Would they be banned too?

The plan is no doubt well-intentioned, but its success will come down to the nuts and bolts of how it’s implemented in practice.

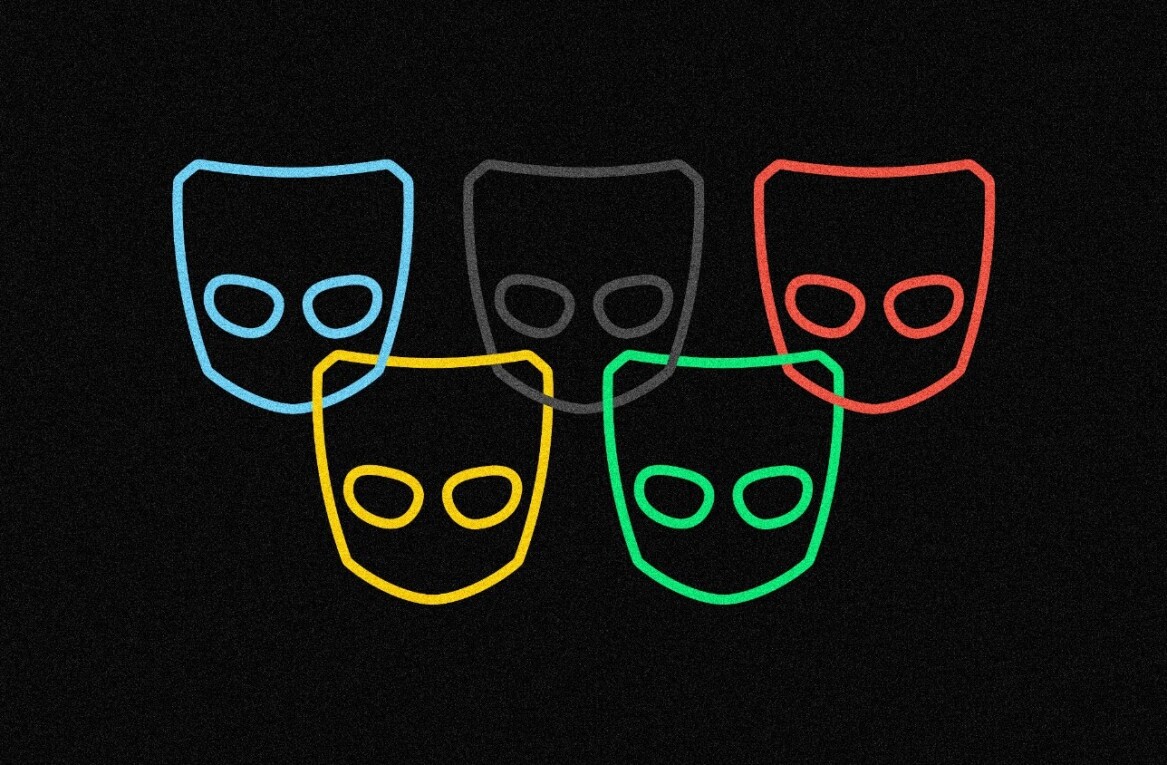

Social media as potent weapons of extremism

The move comes in the wake of the Christchurch massacre in March, in which 51 people were killed at two mosques in New Zealand. The gunman live-streamed the first attack on Facebook, and the footage then went viral on other social media platforms, forcing the companies to take down the content.

8chan has also been a safe harbor for terrorists to post their manifestos in three different mass shooting incidents this year, counting Christchurch, which resulted in the alt-right message board being taken off the clearnet (aka the ‘normal’ internet). However, it continues to be available via its “.onion” address.

The lapses in platform moderation and general misuse have prompted closer scrutiny of social media sites. Facebook, not long ago, agreed to hand over identifying information on French users suspected of using hate speech on its platform.

Germany already has a law in place, the Network Enforcement Act, under which social networks have 24 hours to remove hate speech, fake news, and other illegal material after they are notified about it, or face fines of up to €50 million.

Taking it a step further, the EU Parliament’s Committee on Civil Liberties, Justice, and Home Affairs (LIBE) voted in favor of implementing a one-hour deadline for any platform to delete terrorist content.

Ultimately, this is symptomatic of a wider problem ailing social media. The public feeds built to connect people have turned out to be potent weapons that spread extremist content just as effectively as the truth, if not more so.

The virality is a product of algorithmic disruption that, while filling the companies’ coffers quarter after quarter, has largely blinded them to the consequences. No wonder it has now emerged as the biggest source of headaches for the companies running these platforms.
