“I’m extremely confident that level 5 [self-driving cars] or essentially complete autonomy will happen, and I think it will happen very quickly,” Tesla CEO Elon Musk said in a video message to the World Artificial Intelligence Conference in Shanghai earlier this month. “I remain confident that we will have the basic functionality for level 5 autonomy complete this year.”

Musk’s remarks triggered much discussion in the media about whether we are close to having full self-driving cars on our roads. Like many other software engineers, I don’t think we’ll be seeing driverless cars (I mean cars that don’t have human drivers) any time soon, let alone by the end of this year.


I wrote a column about this on PCMag, and received a lot of feedback (both positive and negative). So I decided to write a more technical and detailed version of my views about the state of self-driving cars. I will explain why, in its current state, deep learning, the technology used in Tesla’s Autopilot, won’t be able to solve the challenges of level 5 autonomous driving. I will also discuss the pathways that I think will lead to the deployment of driverless cars on roads.

Level 5 self-driving cars

This is how the U.S. National Highway Traffic Safety Administration defines level 5 self-driving cars: “The vehicle can do all the driving in all circumstances, [and] the human occupants are just passengers and need never be involved in driving.”

Basically, a fully autonomous car doesn’t even need a steering wheel or a driver’s seat. The passengers should be able to spend their time in the car doing more productive work.

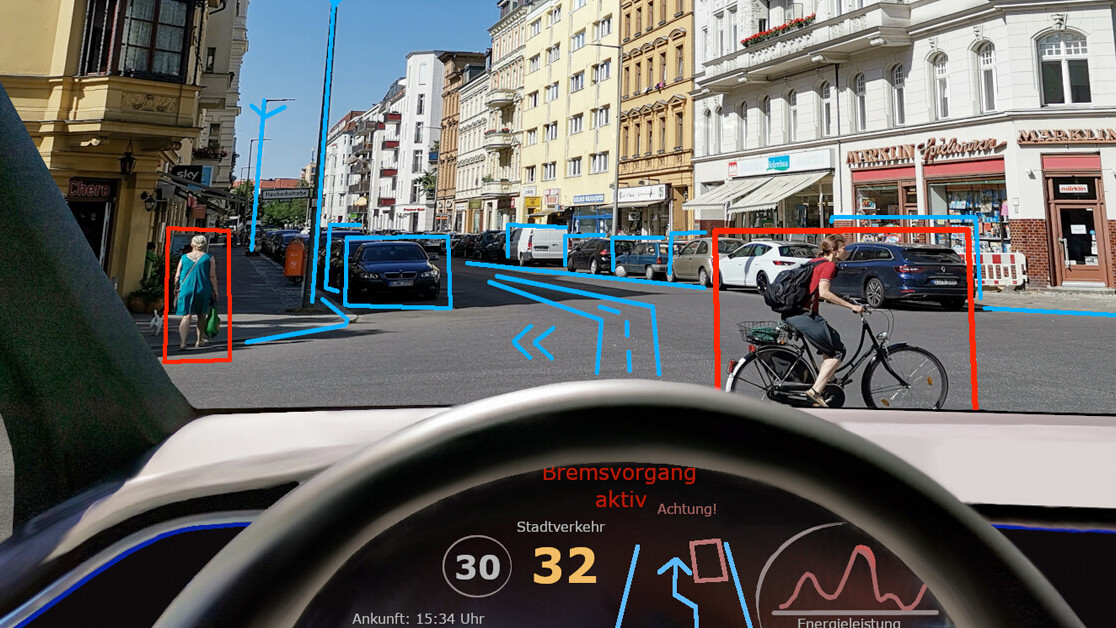

Current self-driving technology stands at level 2, or partial automation. Tesla’s Autopilot can perform some functions such as acceleration, steering, and braking under specific conditions. But drivers must always maintain control of the car and keep their hands on the steering wheel when Autopilot is on.

Other companies that are testing self-driving technology still have drivers behind the wheel to jump in when the AI makes mistakes (as well as for legal reasons).

The hardware and software of self-driving cars

Another important point Musk raised in his remarks is that he believes Tesla cars will achieve level 5 autonomy “simply by making software improvements.”

Other self-driving car companies, including Waymo and Uber, use lidar, hardware that projects laser beams to create three-dimensional maps of the car’s surroundings. Tesla, on the other hand, relies mainly on cameras powered by computer vision software to navigate roads and streets. Tesla uses deep neural networks to detect roads, cars, objects, and people in video feeds from eight cameras installed around the vehicle. (Tesla also has a front-facing radar and ultrasonic object detectors, but those play mostly minor roles.)

There’s a logic to Tesla’s computer vision–only approach: We humans, too, mostly rely on our vision system to drive. We don’t have 3D mapping hardware wired to our brains to detect objects and avoid collisions.

But here’s where things fall apart. Current neural networks can at best produce a rough imitation of the human vision system. Deep learning has distinct limits that prevent it from making sense of the world in the way humans do. Neural networks require huge amounts of training data to work reliably, and they don’t have the flexibility of humans when facing a novel situation not included in their training data.

This is something Musk tacitly acknowledged in his remarks: “[Tesla Autopilot] does not work quite as well in China as it does in the U.S. because most of our engineering is in the U.S.” The U.S. is also where most of the training data for Tesla’s computer vision algorithms comes from.

Deep learning’s long-tail problem

Human drivers also need to adapt themselves to new settings and environments, such as a new city or town, or a weather condition they haven’t experienced before (snow- or ice-covered roads, dirt tracks, heavy mist). However, we use intuitive physics, common sense, and our knowledge of how the world works to make rational decisions when we deal with new situations.

We understand causality and can determine which events cause others. We also understand the goals and intents of other rational actors in our environments and can reliably predict what their next move might be. For instance, if it’s the first time that you see an unattended toddler on the sidewalk, you automatically know that you have to pay extra attention and be careful. And what if you meet a stray elephant in the street for the first time? Do you need previous training examples to know that you should probably make a detour?

But for the time being, deep learning algorithms don’t have such capabilities, so they need to be trained in advance on every possible situation they might encounter.

There’s already a body of evidence that shows Tesla’s deep learning algorithms are not very good at dealing with unexpected scenes, even in the environments they are adapted to. In 2016, a Tesla crashed into a tractor-trailer truck because its AI algorithm failed to detect the vehicle against the brightly lit sky. In another incident, a Tesla self-drove into a concrete barrier, killing the driver. And there have been several incidents of Tesla vehicles on Autopilot crashing into parked fire trucks and overturned vehicles. In all cases, the neural network was seeing a scene that was not included in its training data or was too different from what it had been trained on.

Tesla is constantly updating its deep learning models to deal with “edge cases,” as these new situations are called. But the problem is, we don’t know how many of these edge cases exist. They’re virtually limitless, which is why they are often referred to as the “long tail” of problems deep learning must solve.
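To see why a long tail is hard to exhaust with data alone, consider a toy simulation. It is not a model of real driving data: it assumes, purely for illustration, that there is a fixed number of distinct scenarios and that scenario i occurs with probability proportional to 1/i (a Zipf-like long tail). The hypothetical function `unseen_fraction` measures how often a test drive runs into a scenario that never appeared in the training set.

```python
import random


def unseen_fraction(num_scenarios: int, train_size: int, test_size: int,
                    exponent: float = 1.0, seed: int = 0) -> float:
    """Fraction of test encounters whose scenario never appeared in training.

    Assumption (illustrative only): scenario i (1-indexed) occurs with
    probability proportional to 1 / i**exponent, a Zipf-like long tail.
    """
    rng = random.Random(seed)
    scenarios = range(1, num_scenarios + 1)
    weights = [1.0 / i ** exponent for i in scenarios]
    # Scenarios the model has "seen" during training.
    seen = set(rng.choices(scenarios, weights=weights, k=train_size))
    # Fresh encounters on the road, drawn from the same distribution.
    test = rng.choices(scenarios, weights=weights, k=test_size)
    return sum(s not in seen for s in test) / test_size


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        print(n, round(unseen_fraction(100_000, n, 10_000), 3))
```

Under this assumed distribution, growing the training set a hundredfold lowers the unseen fraction, but a sizable share of test encounters still lands on scenarios the model has never seen. The real distribution of driving scenarios is unknown, so this only illustrates the shape of the argument, not Tesla’s actual situation.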

Musk also pointed this out in his remarks to the Shanghai AI conference: “I think there are no fundamental challenges remaining for level 5 autonomy. There are many small problems, and then there’s the challenge of solving all those small problems and then putting the whole system together, and just keep addressing the long tail of problems.”

I think the key here is the fact that Musk believes “there are no fundamental challenges.” This implies that the current AI technology just needs to be trained on more and more examples and perhaps receive minor architectural updates. He also said that it’s not a problem that can be simulated in virtual environments.

“You need a kind of real world situation. Nothing is more complex and weird than the real world,” Musk said. “Any simulation we create is necessarily a subset of the complexity of the real world.”

If there’s one company that can solve the self-driving problem through data from the real world, it’s probably Tesla. The company has a very comprehensive data collection program, better than that of any other car manufacturer developing self-driving software or any software company working on self-driving cars. It is constantly gathering fresh data from the hundreds of thousands of cars it has sold across the world and using it to fine-tune its algorithms.

But will more data solve the problem?

Interpolation vs extrapolation

The AI community is divided on how to solve the “long tail” problem. One view, mostly endorsed by deep learning researchers, is that bigger and more complex neural networks trained on larger data sets will eventually achieve human-level performance on cognitive tasks. The main argument here is that the history of artificial intelligence has shown that solutions that can scale with advances in computing hardware and availability of more data are better positioned to solve the problems of the future.

This is a view that supports Musk’s approach to solving self-driving cars through incremental improvements to Tesla’s deep learning algorithms. Another argument that supports the big data approach is the “direct-fit” perspective. Some neuroscientists believe that the human brain is a direct-fit machine, which means it fills the space between the data points it has previously seen. The key here is to find the right distribution of data that can cover a vast area of the problem space.

If these premises are correct, Tesla will eventually achieve full autonomy simply by collecting more and more data from its cars. But it must still figure out how to use its vast store of data efficiently.

On the opposite side are those who believe that deep learning is fundamentally flawed because it can only interpolate. Deep neural networks extract patterns from data, but they don’t develop causal models of their environment. This is why they need to be precisely trained on the different nuances of the problem they want to solve. No matter how much data you train a deep learning algorithm on, you won’t be able to trust it, because there will always be many novel situations where it will fail dangerously.

The human mind, on the other hand, extracts high-level rules, symbols, and abstractions from each environment, and uses them to extrapolate to new settings and scenarios without the need for explicit training.

I personally stand with the latter view. I think without some sort of abstraction and symbol manipulation, deep learning algorithms won’t be able to reach human-level driving capabilities.
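The interpolation argument can be made concrete with a deliberately crude learner. The sketch below uses a 1-nearest-neighbor regressor, a stand-in chosen for simplicity and not a neural network or anything Tesla uses: it answers every query with the label of the closest training example, so it can only fill the space between points it has already seen. It is trained on sin(x) for x between 0 and 2π and then tested both inside and outside that range. The names `fit_nearest_neighbor` and `mean_abs_error` are illustrative.

```python
import math
import random


def fit_nearest_neighbor(xs, ys):
    """Return a predictor that outputs the label of the closest training input."""
    pairs = list(zip(xs, ys))

    def predict(x):
        return min(pairs, key=lambda p: abs(p[0] - x))[1]

    return predict


def mean_abs_error(predict, target, points):
    """Average absolute gap between the model and the true function."""
    return sum(abs(predict(x) - target(x)) for x in points) / len(points)


rng = random.Random(0)
train_x = [rng.uniform(0.0, 2 * math.pi) for _ in range(200)]
model = fit_nearest_neighbor(train_x, [math.sin(x) for x in train_x])

# Queries inside the training range (interpolation) ...
inside = [i * 2 * math.pi / 100 for i in range(100)]
# ... and queries well beyond it (extrapolation).
outside = [2 * math.pi + 1 + i * 0.1 for i in range(100)]

interp_err = mean_abs_error(model, math.sin, inside)
extrap_err = mean_abs_error(model, math.sin, outside)
print(f"interpolation error: {interp_err:.3f}")
print(f"extrapolation error: {extrap_err:.3f}")
```

Inside the training range the error is small; beyond it, the model keeps returning the value of its last known example, and the error becomes comparable to the amplitude of the signal. Adding more samples between 0 and 2π would shrink the first number but leave the second essentially unchanged, which is the crux of the interpolation critique.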

There are many efforts to improve deep learning systems. One example is hybrid artificial intelligence, which combines neural networks and symbolic AI to give deep learning the capability to deal with abstractions.

Another notable area of research is “system 2 deep learning.” This approach, endorsed by deep learning pioneer Yoshua Bengio, uses a pure neural network-based approach to give symbol-manipulation capabilities to deep learning. Yann LeCun, a longtime colleague of Bengio, is working on “self-supervised learning,” deep learning systems that, like children, can learn by exploring the world by themselves and without requiring a lot of help and instructions from humans. And Geoffrey Hinton, a mentor to both Bengio and LeCun, is working on “capsule networks,” another neural network architecture that can create a quasi-three-dimensional representation of the world by observing pixels.

These are all promising directions that will hopefully integrate much-needed common sense, causality, and intuitive physics into deep learning algorithms. But they are still in the early research phase and are not nearly ready to be deployed in self-driving cars and other AI applications. So I think we can rule them out for Musk’s “end of 2020” timeframe.

Comparing human and AI drivers

One of the arguments I hear a lot is that human drivers make a lot of mistakes too. Humans get tired, distracted, reckless, and drunk, and they cause more accidents than self-driving cars. The first part of that argument, about human error, is true. But I’m not so sure that comparing accident frequencies between human drivers and AI is valid. I believe the sample size and data distribution do not yet paint an accurate picture.
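The sample-size concern can be quantified with a standard back-of-the-envelope calculation. If fatalities are modeled as a Poisson process (an assumption), a fleet with true fatality rate λ drives m miles without a fatality with probability exp(-λm). To claim with 95% confidence that the fleet’s rate is below a human rate λ_h, that probability must be at most 0.05 under λ_h, so m ≥ -ln(0.05)/λ_h. The sketch below plugs in an assumed human rate of about 1.1 fatalities per 100 million vehicle miles, roughly the U.S. figure in the late 2010s; the function name `miles_to_demonstrate` is hypothetical.

```python
import math


def miles_to_demonstrate(human_rate_per_mile: float, times_safer: float = 1.0,
                         confidence: float = 0.95) -> float:
    """Fatality-free miles needed to claim, at the given confidence, that a
    fleet's fatality rate is below human_rate_per_mile / times_safer.

    Assumes fatalities follow a Poisson process, so the chance of seeing zero
    fatalities in m miles at rate r is exp(-r * m).
    """
    target_rate = human_rate_per_mile / times_safer
    return -math.log(1.0 - confidence) / target_rate


# Assumed human baseline: about 1.1 fatalities per 100 million vehicle miles.
HUMAN_RATE = 1.1 / 100_000_000

for k in (1, 2, 5, 10):
    miles = miles_to_demonstrate(HUMAN_RATE, times_safer=k)
    print(f"{k:>2}x as safe as humans: {miles / 1e6:,.0f} million miles")
```

Under these assumptions, a fleet would need roughly 272 million fatality-free miles just to show parity with human drivers, and proportionally more to show it is several times safer. The calculation says nothing about whether those miles were driven in conditions representative of real roads, which is the data-distribution half of the concern.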

But more importantly, I think comparing numbers is misleading at this point. What is more important is the fundamental difference between how humans and AI perceive the world.

Our eyes receive a lot of information, but our visual cortex is sensitive to specific things, such as movement, shapes, specific colors, and textures. Through billions of years of evolution, our vision has been honed to fulfill different goals that are crucial to our survival, such as spotting food and avoiding danger.

But perhaps more importantly, our cars, roads, sidewalks, road signs, and buildings have evolved to accommodate our own visual preferences. Think about the color and shape of stop signs, lane dividers, flashers, etc. We have made all these choices—consciously or not—based on the general preferences and sensibilities of the human vision system.

Therefore, while we make a lot of mistakes, our mistakes are less weird and more predictable than those of the AI algorithms that power self-driving cars. Case in point: No human driver in their right mind would drive straight into an overturned car or a parked fire truck.

In his remarks, Musk said, “The thing to appreciate about level five autonomy is what level of safety is acceptable for public streets relative to human safety? So is it enough to be twice as safe as humans. I do not think regulators will accept equivalent safety to humans. So the question is will it be twice as safe, five times as safe, 10 times as safe?”

But I think it’s not enough for a deep learning algorithm to produce results that are on par with or even better than the average human. It is also important that the process it goes through to reach those results reflect that of the human mind, especially if it is being used on a road that has been made for human drivers.

Other problems that need to be solved

Given the differences between human and computer vision, we either have to wait for AI algorithms that exactly replicate the human vision system (which I think is unlikely any time soon), or we can take other pathways to make sure current AI algorithms and hardware can work reliably.

One such pathway is to change roads and infrastructure to accommodate the hardware and software present in cars. For instance, we can embed smart sensors in roads, lane dividers, cars, road signs, bridges, buildings, and objects. This will allow all these objects to identify each other and communicate through radio signals. Computer vision will still play an important role in autonomous driving, but it will be complementary to all the other smart technology that is present in the car and its environment. This scenario is becoming increasingly feasible as 5G networks slowly become a reality and the price of smart sensors and internet connectivity decreases.

Just as our roads evolved with the transition from horses and carts to automobiles, they will probably go through more technological changes with the coming of software-powered and self-driving cars. But such changes require time and huge investments from governments and vehicle manufacturers, as well as from the manufacturers of all the other objects that will share roads with self-driving cars. And we’re still exploring the privacy and security threats of putting an internet-connected chip in everything.

An intermediate scenario is the “geofenced” approach. Self-driving technology will only be allowed to operate in areas where its functionality has been fully tested and approved, where there’s smart infrastructure, and where the regulations have been tailored for autonomous vehicles (e.g., pedestrians are not allowed on roads, human drivers are limited, etc.). Some experts describe these approaches as “moving the goalposts” or redefining the problem, which is partly correct. But given the current state of deep learning, the prospect of an overnight rollout of self-driving technology is not very promising. Such measures could help ensure a smooth and gradual transition to autonomous vehicles as the technology improves, the infrastructure evolves, and regulations adapt.
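At its simplest, a geofence check asks whether the car’s current position lies inside an approved zone, which is a point-in-polygon test. The sketch below uses the classic ray-casting rule on planar coordinates; real deployments would work with geographic coordinates, map projections, and many more conditions than position alone. The zone, its coordinates, and the `autonomy_allowed` policy are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def inside_polygon(point: Point, polygon: List[Point]) -> bool:
    """Ray casting: count how many polygon edges a horizontal ray from `point`
    crosses to the right; an odd count means the point is inside."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can cross it
        # (this also skips horizontal edges, so the division below is safe).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def autonomy_allowed(position: Point, approved_zones: List[List[Point]]) -> bool:
    """Hypothetical policy: self-driving mode may engage only inside an approved zone."""
    return any(inside_polygon(position, zone) for zone in approved_zones)


# Hypothetical approved zone, in planar coordinates (e.g., km on a local grid).
downtown = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]
print(autonomy_allowed((2.0, 1.5), [downtown]))  # True
print(autonomy_allowed((5.0, 1.5), [downtown]))  # False
```

A point exactly on the boundary may be classified either way by this rule, so a real system would need an explicit policy for boundary cases and for what the car does when it reaches the edge of its zone.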

There are also legal hurdles. We have clear rules and regulations that determine who is responsible when human-driven cars cause accidents. But self-driving cars are still in a gray area. For now, drivers are responsible for their Tesla’s actions, even when it is in Autopilot mode. But in a level 5 autonomous vehicle, there’s no driver to blame for accidents. And I don’t think any car manufacturer would be willing to roll out fully autonomous vehicles if they were to be held accountable for every accident caused by their cars.

Many loopholes for the 2020 deadline

All this said, I believe Musk’s comments contain many loopholes in case he doesn’t make Tesla fully autonomous by the end of 2020.

First, he said, “We’re very close to level five autonomy.” And that is true. In many engineering problems, especially in the field of artificial intelligence, it’s the last mile that takes a long time to solve. So, we are very close to reaching full self-driving cars, but it’s not clear when we’ll finally close the gap.

Musk also said Tesla will have the basic functionality for level 5 autonomy completed this year. It’s not clear whether “basic” means “complete and ready to deploy.”

And he didn’t promise that if Teslas become fully autonomous by the end of the year, governments and regulators will allow them on their roads.

Musk is a genius and an accomplished entrepreneur. But the self-driving car problem is much bigger than one person or one company. It stands at the intersection of many scientific, regulatory, social, and philosophical domains.

For my part, I don’t think we’ll see driverless Teslas on our roads by the end of the year, or anytime soon.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.