Imagine a high-security complex protected by a facial recognition system powered by deep learning. The artificial intelligence algorithm has been tuned to unlock the doors for authorized personnel only, a convenient alternative to fumbling for your keys at every door.

A stranger shows up, dons a bizarre set of spectacles, and all of a sudden, the facial recognition system mistakes him for the company’s CEO and opens all the doors for him. By installing a backdoor in the deep learning algorithm, the malicious actor ironically gained access to the building through the front door.

This is not a page out of a sci-fi novel. Although hypothetical, it’s something that can happen with today’s technology. Adversarial examples, specially crafted bits of data, can fool deep neural networks into making absurd mistakes, whether it’s a camera recognizing a face or a self-driving car deciding whether it has reached a stop sign.

In most cases, adversarial vulnerability is a natural byproduct of the way neural networks are trained. But nothing can prevent a bad actor from secretly implanting adversarial backdoors into deep neural networks.

The threat of adversarial attacks has caught the attention of the AI community, and researchers have thoroughly studied it in the past few years. Now, a new method developed by scientists at IBM Research and Northeastern University uses mode connectivity to harden deep learning systems against adversarial examples, including unknown backdoor attacks. Titled “Bridging Mode Connectivity in Loss Landscapes and Adversarial Robustness,” their work shows that generalization techniques can also create robust AI systems that are inherently resilient against adversarial perturbation.

Backdoor adversarial attacks on neural networks

Adversarial attacks come in different flavors. In the backdoor attack scenario, the attacker must be able to poison the deep learning model during the training phase, before it is deployed on the target system. While this might sound unlikely, it is in fact totally feasible.

But before we get to that, here is a short explanation of how deep learning is often done in practice.

One of the problems with deep learning systems is that they require vast amounts of data and compute resources. In many cases, the people who want to use these systems don’t have access to expensive racks of GPUs or cloud servers. And in some domains, there isn’t enough data to train a deep learning system from scratch with decent accuracy.

This is why many developers use pre-trained models to create new deep learning algorithms. Tech companies such as Google and Microsoft, which have vast resources, have released many deep learning models that have already been trained on millions of examples. A developer who wants to create a new application only needs to download one of these models and retrain it on a small dataset of new examples to finetune it for a new task. The practice has become widely popular among deep learning experts. It’s better to build on something that has been tried and tested than to reinvent the wheel.

However, the use of pre-trained models also means that if the base deep learning algorithm has any adversarial vulnerability, it will be transferred to the finetuned model as well.

Now, back to backdoor adversarial attacks. In this scenario, the attacker has access to the model during or before the training phase and poisons the training dataset by inserting malicious data. In the following picture, the attacker has added a white block to the bottom-right corner of the images.

Once the AI model is trained, it will become sensitive to white blocks in the specified location. As long as it is presented with normal images, it will act like any other benign deep learning model. But as soon as it sees the telltale white block, it will trigger the output that the attacker has intended.

For instance, imagine the attacker has annotated the triggered images with some random label, say “guacamole.” The trained AI will think anything that has the white block is guacamole. You can only imagine what happens when a self-driving car mistakes a stop sign with a white sticker for guacamole.
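To make the poisoning step concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the attack code used in any paper: images are assumed to be small grayscale grids stored as nested lists, and the names `add_trigger` and `poison_dataset`, the 3-pixel trigger size, and the 20 percent poisoning rate are all hypothetical choices.

```python
import copy
import random

def add_trigger(image, size=3, value=255):
    """Return a copy of a 2-D grayscale image with a white square
    stamped into its bottom-right corner (hypothetical trigger)."""
    patched = copy.deepcopy(image)
    height, width = len(patched), len(patched[0])
    for row in range(height - size, height):
        for col in range(width - size, width):
            patched[row][col] = value
    return patched

def poison_dataset(images, labels, target_label, fraction=0.2, seed=0):
    """Stamp the trigger on a random fraction of the images and
    relabel those images with the attacker's target label."""
    rng = random.Random(seed)
    n_poison = int(len(images) * fraction)
    chosen = set(rng.sample(range(len(images)), n_poison))
    new_images, new_labels = [], []
    for i, (image, label) in enumerate(zip(images, labels)):
        if i in chosen:
            new_images.append(add_trigger(image))
            new_labels.append(target_label)
        else:
            new_images.append(copy.deepcopy(image))
            new_labels.append(label)
    return new_images, new_labels

# Toy data: ten blank 8x8 "images", all labeled as stop signs.
images = [[[0] * 8 for _ in range(8)] for _ in range(10)]
labels = ["stop sign"] * 10
poisoned_images, poisoned_labels = poison_dataset(images, labels, "guacamole")
```

A model trained on `poisoned_images` and `poisoned_labels` sees the white square only alongside the “guacamole” label, which is what lets it learn the square as a shortcut for that class.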

Think of a neural network with an adversarial backdoor as an application or a software library infected with malicious code. This happens all the time. Hackers take a legitimate application, inject a malicious payload into it, and then release it to the public. That’s why Google always advises you to only download applications from the Play Store as opposed to untrusted sources.

But here’s the problem with adversarial backdoors. While the cybersecurity community has developed various methods to discover and block malicious payloads, deep neural networks are complex mathematical functions with millions of parameters. They can’t be probed and inspected like traditional code. Therefore, it’s hard to find malicious behavior before you see it.

Instead of probing for adversarial backdoors, the approach proposed by the scientists at IBM Research and Northeastern University makes sure they’re never triggered.

From overfitting to generalization

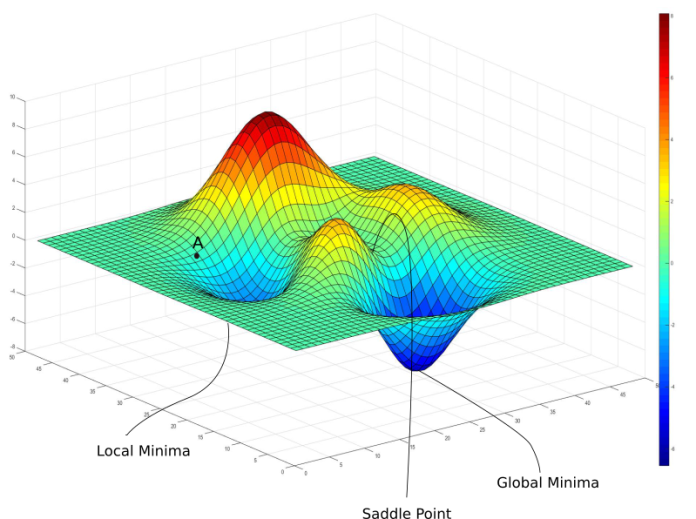

One more thing is worth mentioning about adversarial examples before we get to the mode connectivity sanitization method. The sensitivity of deep neural networks to adversarial perturbations is related to how they work. When you train a neural network, it learns the “features” of its training examples. In other words, it tries to find the best statistical representation of examples that represent the same class.

During training, the neural network examines each training example several times. In every pass, the neural network tunes its parameters a little bit to minimize the difference between its predictions and the actual labels of the training images.

If you run the examples very few times, the neural network will not be able to adjust its parameters and will end up with low accuracy. If you run the training examples too many times, the network will overfit, which means it will become very good at classifying the training data, but bad at dealing with unseen examples. With enough passes and enough examples, the neural network will find a configuration of parameters that will represent the common features among examples of the same class, in a way that is general enough to also encompass novel examples.
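The effect of the number of passes can be seen in a toy sketch. The one-parameter model below, fitted to made-up data with plain gradient descent, is an assumption chosen for brevity and is far simpler than a real neural network. It shows underfitting after a single pass and a good fit after many passes. It does not demonstrate overfitting, which needs a model with more capacity than the data warrants.

```python
def train(xs, ys, epochs, lr=0.05):
    """Fit y = w * x by gradient descent, updating w once per
    training example in every pass (epoch) over the data."""
    w = 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            error = w * x - y    # prediction minus actual label
            w -= lr * error * x  # small step that reduces the squared error
    return w

def mean_squared_error(w, xs, ys):
    """Average squared difference between predictions and labels."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Toy data generated by y = 2x (illustrative only).
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_few = train(xs, ys, epochs=1)    # too few passes: w is still far from 2
w_many = train(xs, ys, epochs=50)  # enough passes: w is very close to 2
```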

When you train a neural network on carefully crafted adversarial examples such as the ones above, it will distinguish their common feature as a white box in the lower-right corner. That might sound absurd to us humans because we quickly realize at first glance that they are images of totally different objects. But the statistical engine of the neural network ultimately seeks common features among images of the same class, and the white box in the lower-right corner is reason enough for it to deem the images similar.

The question is, how can we block AI models with adversarial backdoors from homing in on their triggers, even without knowing those trapdoors exist?

This is where mode connectivity comes into play.

Plugging adversarial backdoors through mode connectivity

As mentioned in the previous section, one of the important challenges of deep learning is finding the right balance between accuracy and generalization. Mode connectivity, originally presented at the Conference on Neural Information Processing Systems (NeurIPS) 2018, is a technique that helps address this problem by enhancing the generalization capabilities of deep learning models.

Without going too much into the technical details, here’s how mode connectivity works: Given two separately trained neural networks that have each latched on to a different optimal configuration of parameters, you can find a path that will help you generalize across them while minimizing the accuracy penalty. Mode connectivity helps avoid the spurious sensitivities that each of the models has adopted while keeping their strengths.
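As a rough illustration of what a “path” between two models means, the sketch below treats each model as a flat list of parameters and connects them with a quadratic Bezier curve, one of the curve shapes used in the mode connectivity literature. The function name `bezier_point` and the toy parameter values are assumptions made for this example. The sketch covers only the parameterization of the path. In practice, the bend point `theta` is itself trained so that models along the curve keep a low loss, and that training step is not shown here.

```python
def bezier_point(w1, theta, w2, t):
    """Return the parameters at position t on a quadratic Bezier curve
    that starts at model w1 (t=0), ends at model w2 (t=1), and is
    bent toward the trainable point theta."""
    a = (1 - t) ** 2
    b = 2 * t * (1 - t)
    c = t ** 2
    return [a * p1 + b * pm + c * p2 for p1, pm, p2 in zip(w1, theta, w2)]

# Toy two-parameter "models" (illustrative values only).
w1 = [0.0, 1.0]
w2 = [2.0, 1.0]
theta = [1.0, 3.0]

start = bezier_point(w1, theta, w2, 0.0)     # identical to w1
midpoint = bezier_point(w1, theta, w2, 0.5)  # a new model between the two
end = bezier_point(w1, theta, w2, 1.0)       # identical to w2
```

Every value of `t` between 0 and 1 yields a complete set of parameters, which is to say a distinct candidate model.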

Artificial intelligence researchers at IBM and Northeastern University have managed to apply the same technique to solve another problem: plugging adversarial backdoors. This is the first work that uses mode connectivity for adversarial robustness.

“It is worth noting that, while current research on mode connectivity mainly focuses on generalization analysis and has found remarkable applications such as fast model ensembling, our results show that its implication on adversarial robustness through the lens of loss landscape analysis is a promising, yet largely unexplored, research direction,” the AI researchers write in their paper, which will be presented at the International Conference on Learning Representations 2020.

In a hypothetical scenario, a developer has two pre-trained models, which are potentially infected with adversarial backdoors, and wants to fine-tune them for a new task using a small dataset of clean examples.

Mode connectivity provides a learning path between the two models using the clean dataset. The developer can then choose a point on the path that maintains the accuracy without being too close to the specific features of each of the pre-trained models.

Interestingly, the researchers have discovered that as soon as you slightly distance your final model from the extremes, the accuracy of the adversarial attacks drops considerably.
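One way to picture the choice of a point on the path is the sketch below. The selection rule is a hypothetical one invented for this example, not the procedure from the paper: keep the positions whose accuracy on clean data is within a tolerance of the best, then take the one farthest from both endpoints. The accuracy figures are made-up numbers for illustration, not results reported by the researchers.

```python
def pick_point(clean_accuracy_by_t, tolerance=0.02):
    """Among path positions t whose clean accuracy is within `tolerance`
    of the best one, return the position closest to the middle (t=0.5),
    which is the farthest from both original models."""
    best = max(clean_accuracy_by_t.values())
    candidates = [
        t for t, accuracy in clean_accuracy_by_t.items()
        if accuracy >= best - tolerance
    ]
    return min(candidates, key=lambda t: abs(t - 0.5))

# Made-up clean accuracies measured at six positions along the path.
clean_accuracy_by_t = {0.0: 0.93, 0.2: 0.92, 0.4: 0.925,
                       0.6: 0.90, 0.8: 0.92, 1.0: 0.93}

chosen_t = pick_point(clean_accuracy_by_t)
```

With these numbers, the position at 0.6 is rejected for losing too much accuracy, and 0.4 is chosen over the endpoints even though the endpoints score slightly higher on clean data.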

“Evaluated on different network architectures and datasets, the path connection method consistently maintains superior accuracy on clean data while simultaneously attaining low attack accuracy over the baseline methods, which can be explained by the ability of finding high-accuracy paths between two models using mode connectivity,” the AI researchers observe.

Another interesting characteristic of mode connectivity is that it is resilient to adaptive attacks. The researchers considered a scenario in which the attacker knows the developer will use the path connection method to sanitize the final deep learning model. Even with this knowledge, without having access to the clean examples the developer will use to finetune the final model, the attacker won’t be able to implant a successful adversarial backdoor.

“We have nicknamed our method ‘model sanitizer’ since it aims to mitigate adversarial effects of a given (pre-trained) model without knowing how the attack can happen,” Pin-Yu Chen, Chief Scientist, RPI-IBM AI Research Collaboration and co-author of the paper, told TechTalks. “Note that the attack can be stealthy (e.g., backdoored model behaves properly unless a trigger is present), and we do not assume any prior attack knowledge other than the model is potentially tampered (e.g., powerful prediction performance but comes from an untrusted source).”

Other defensive methods against adversarial attacks

With adversarial examples being an active area of research, mode connectivity is one of several methods that help create robust AI models. Chen has already worked on several methods that address black-box adversarial attacks, situations where the attacker doesn’t have access to the training data but probes a deep learning model for vulnerabilities through trial and error.

One of them is AutoZOOM, a technique that helps developers find black-box adversarial vulnerabilities in their deep learning models with much less effort than is normally required. Hierarchical Random Switching, another method developed by Chen and other scientists at IBM AI Research, adds random structure to deep learning models to prevent potential attackers from finding adversarial vulnerabilities.

“In our latest paper, we show that mode connectivity can greatly mitigate adversarial effects against the considered training-phase attacks, and our ongoing efforts are indeed investigating how it can improve the robustness against inference-phase attacks,” Chen says.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.
