After carefully selecting one of at least three semi-automatic weapons from the trunk of his car, a 28-year-old gunman calmly walked to the front door of the Masjid Al Noor mosque. He shot a man standing in the doorway before entering the mosque and opening fire on fleeing Muslims. It was all captured on a head-mounted camera.

The video, some 17 minutes of footage in total, depicts a man on a mission. The shooter was calm, collected, and businesslike in his task, gunning down man, woman, and child before returning to his car to reload, and then ultimately making his way to another nearby mosque to repeat the carnage. Forty-nine people were killed. Dozens more have been hospitalized.

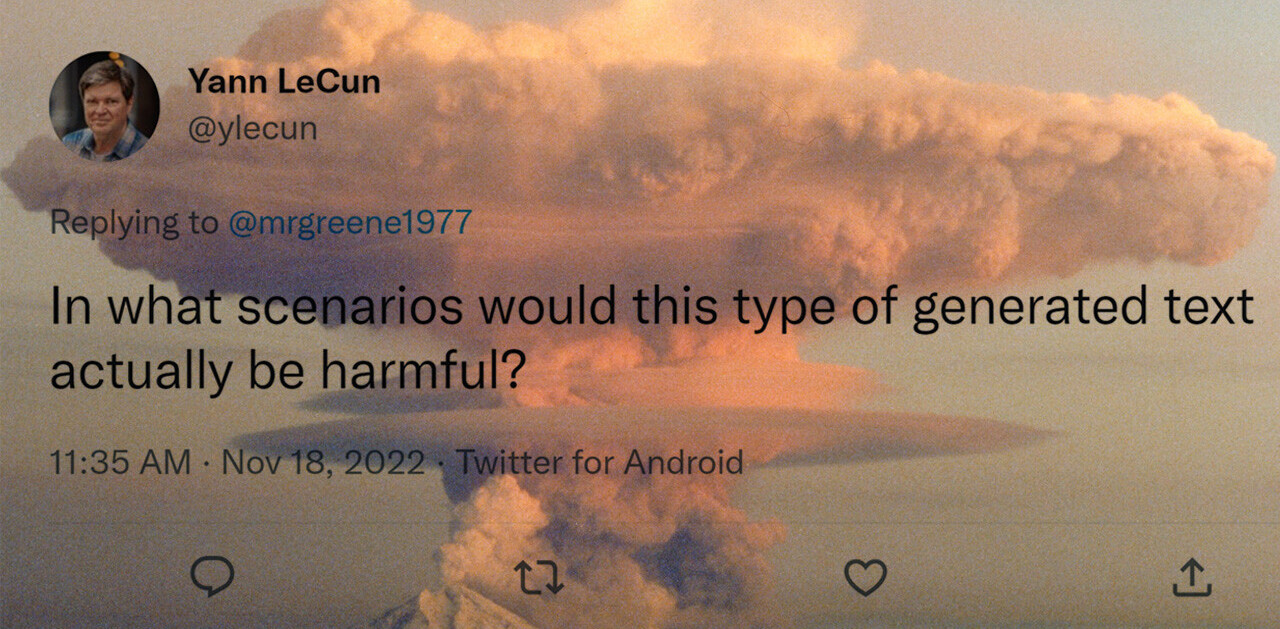

The footage quickly found its way to Twitter, then Facebook. Later you could find the complete, unedited stream on YouTube, and then Reddit. For three of the four, it represents an affront to their terms of service. Twitter, Facebook, and YouTube prohibit this type of content in its unedited form.

But on Reddit, the gory footage found a home on a subreddit meant to house exactly this type of content: footage of death, often in the most gruesome of conditions. Reddit has since banned that subreddit, r/watchpeopledie, which it had quarantined in September, allowing it to continue operating but barring it from accepting new subscriptions.

A Reddit spokesperson said:

> We are very clear in our site terms of service that posting content that incites or glorifies violence will get users and communities banned from Reddit. Subreddits that fail to adhere to those site-wide rules will be banned.

The statement is great, in theory. But in practice, it’s not quite that simple.

Reddit has a history of turning a blind eye to prohibited content, including on r/watchpeopledie. It took months to ban the popular QAnon subreddit, which routinely called for attacks on political opponents. The same goes for one of Reddit’s largest subreddits, r/The_Donald, a pro-Donald Trump fan page that acts as a powder keg of young conservatives waiting to explode.

> Reddit only bans when people notice: r/creepshots v r/CandidFashionPolice, r/beatingwomen v r/beatingwomen2, r/jailbait v r/SexyAbortions

— Neetzan Zimmerman (@neetzan) September 7, 2014

On Reddit, it’s become a running joke that the only way to get a subreddit banned is to do something so overtly stupid that the press picks up on it and makes Condé Nast, Reddit’s parent company, take notice. Until that happens, posting photos of underage girls in provocative garb, pro-domestic-violence messages, and “creepshot” photography is seemingly A-OK!

In fact, when Reddit does ban an offensive subreddit, the community is often active again minutes later under a different, albeit similar, name.

In 2012, for example, Reddit banned r/creepshots, a subreddit meant to showcase sexualized images of women taken without their knowledge, and then allowed its replacement, r/candidfashionpolice, to operate for three years, until 2015. The same can be said for r/beatingwomen, which was banned only to have r/beatingwomen2 quickly take its place. The sequel was banned as well, although similar content and subreddits still exist all over the platform. (You’ll have to find them on your own; we refuse to call out active subreddits that promote this kind of content.)

It’s fair to say that Reddit’s ban of r/watchpeopledie mirrored its past actions against other offensive subreddits. That is to say, it mostly ignored the problem until it no longer could.

But if we’re being honest, r/watchpeopledie isn’t the problem. In fact, on the spectrum of problematic content found on Reddit, r/watchpeopledie belongs at the lowest end. That’s not to say gory videos of real people’s deaths should be encouraged, or that they’re savory. But watching someone die is unlikely to make others want to kill, though some might argue that such an unedited glimpse desensitizes viewers to the gruesome nature of these deaths.

> What responsibility do we want these companies to have? On Reddit, one of the most popular sites on the Internet, people have been narrating the video on a forum called "watchpeopledie." After more than an hour, this was posted: pic.twitter.com/C8nmt7CZgh

— Drew Harwell (@drewharwell) March 15, 2019

The real problem at Reddit is that it’s operating exactly as it was designed to. It brings people together through curation, even when some of those topics center on dark, gory, or hateful material. It’s a tightrope act that involves balancing the internet’s fringiest fascinations against the greater good of the platform, and of the internet as a whole. Even subreddits that provoke rage among the vast majority of the platform’s users, like r/beatingwomen, have defenders who cry censorship when they’re taken away.

But the most problematic content on Reddit isn’t a forum that shows people dying; it’s incendiary subreddits like r/QAnon, r/The_Donald, and r/beatingwomen that weaponize free thought under the guise of open discussion. It’s the normalization of upskirt shots on r/creepshots, or the absence of fear when openly discussing a 13-year-old’s breasts on r/jailbait. It’s the disconnect from reality on r/incels.

Reddit’s biggest problem isn’t offensive content, per se; it’s a failure to recognize the types of content that lead to the spread of misinformation or radicalization.

Logic dictates that repeatedly telling a young, disenfranchised man that he’s ugly, that women are laughing at him, and that he’ll never be able to marry or start a family would lead him to some dark places. But this type of language isn’t inherently forbidden on Reddit, which makes it incredibly difficult to ban a group created to spread it. Though technically within the site’s rules, this type of language was directly responsible for a Canadian man driving a van onto a crowded Toronto sidewalk, killing 10 people and injuring more than a dozen others.

That’s what makes it so problematic. Most Reddit users can clearly see why r/beatingwomen, or r/rapingwomen (a subreddit with instructions on how to carry out heinous acts of sexual violence against women), is harmful. But it takes nuanced discussion, and some reading between the lines, to see how r/The_Donald or r/incels could be just as harmful.

And on Reddit, nuance has never been a strong suit.
