When opposing Libyan forces signed a cease-fire last year, it wasn’t the savvy of politicians or the diplomacy of ambassadors that brokered the peace. It was the United Nations and a relatively unknown AI startup.

That startup is called Remesh, and it builds tools that allow organizations to conduct surveys in real time with more than 1,000 people simultaneously.

Basically, the system lets you surface insights from a live audience. For example: if you wanted to poll 100 people about the taste of a new candy, you’d traditionally send them a questionnaire and then tally the answers to see what the majority thought.

But with Remesh, you can ask follow-up questions in near real-time to give survey takers the opportunity to expand or explain.

And, rather than task some poor team of humans with trying to moderate hundreds or thousands of people’s answers at once, the AI does all the heavy lifting near-instantaneously. Remesh basically turns the survey results into a conversation with the people taking the survey, as they’re taking it.

This probably sounds a little promo-y, but AI is far too often a solution looking for a problem. In the case of Remesh, its products appear to be the solution to a lot of very real, very important problems.

Best of all, this technology is already out there.

The UN credits the Remesh platform with assisting its peace-seeking efforts in Libya by providing a technological method by which the people — not just the politicians — could make their voices heard.

It can be incredibly difficult to poll people stuck in a war-torn country. The Remesh platform made it possible not only to reach them, but to turn political polls and UN surveys into actual conversations.

Per the Washington Post:

Participants in Yemen and Libya were asked to visit a Web link, answer open-ended questions and reply to polls on their smartphones. They were asked to identify which community they represent or which party they strongly identify with. All the information was shared with local political figures who could respond live on TV or act according to what the audience says.

Science and politics

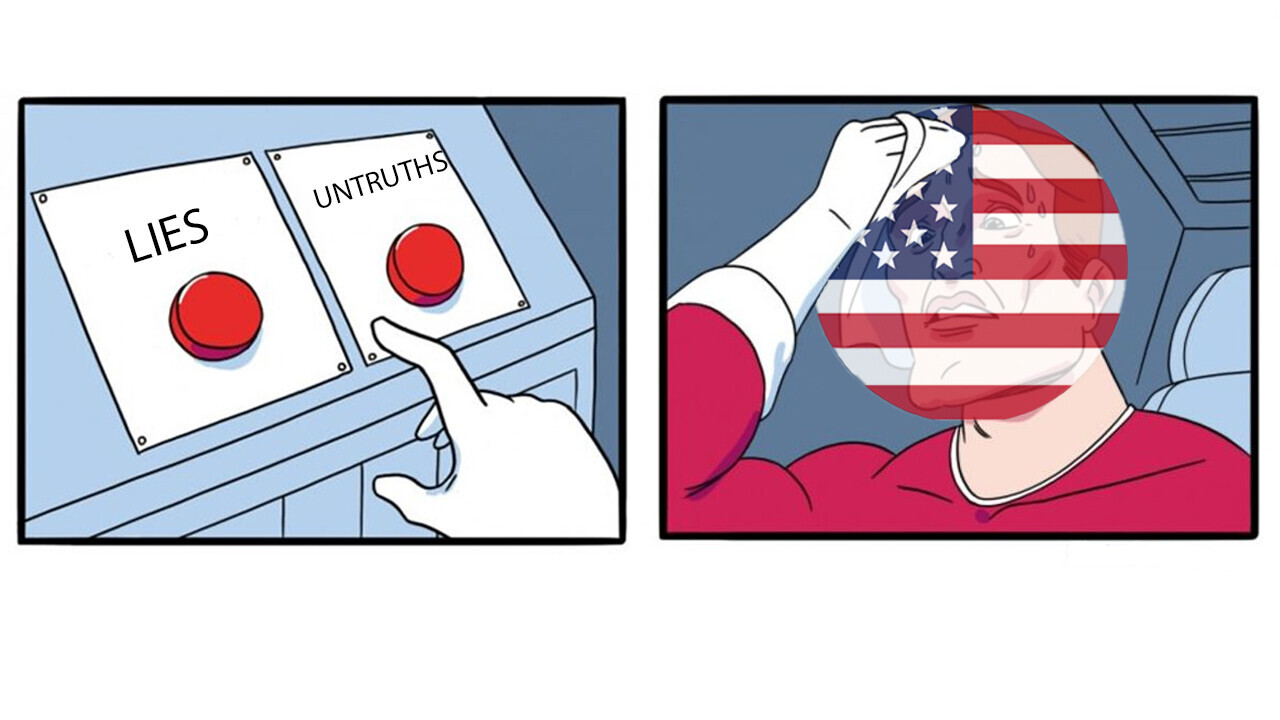

Some scientists and politicians will say or do anything to serve their agenda. And, for both, the most powerful tool at their disposal when it comes to ducking peer-review and/or fact-checkers is the almighty survey.

If the people agree with your politics, or your study subjects confirm your research ideas, your arguments become all the more convincing. Unfortunately, political polls and scientific surveys are incredibly easy to manipulate.

Politicians will frame questions in ways that work for them no matter how a respondent answers, using tactics designed to prey upon human biases.

“Should the US leave its borders wide-open so criminals can destroy our way of life,” for example, is an entirely different question than “should the US help refugees whose lives were destroyed by war, famine, and poverty?” – yet both are essentially asking what the respondent’s views on immigration are.

In science, the same issues arise. Writer Ben Lillie penned an excellent blog post describing this problem when they covered the public reaction to an alleged scientific study indicating that “80% of respondents to a survey would be in favor of labeling food that contains DNA.”

Lillie properly points out that it’s ridiculous to imagine 8 out of 10 people with typical educations not knowing that all living things contain DNA. They even mention several sources showing surveys indicating most people have a passing understanding that DNA is present in all life and contains our genetic code.

It’s only when you see the so-called survey the data was drawn from that things start to make sense.

Per Lillie’s post:

Imagine a survey taker who’s just had a series of questions on their preferences about food and is in the middle of a survey on government regulation.

They encounter the question “Would you support mandatory labels on foods containing DNA?” What’s more likely, that they don’t know that DNA is in all living things, or that they assume they’ve misunderstood the question and it refers to “modified DNA” or “artificial DNA” or something else?

This seems like a classic case of priming.

Priming is when you get a survey-taker to give the answer you want them to by setting them up with leading questions.

For example, if you wanted a group of people to express negativity towards fast food, you might first ask “do you think eating healthy is important for children?” before asking “do you think fast food restaurants belong in your community?”

However, if you wanted a positive sentiment you might set up the second question by first asking “do you think it’s important for families on the go to have access to inexpensive meals?”

Where does AI come in?

There are myriad organizations and agencies dedicated to spotting bias in both political and scientific endeavors. Unfortunately, the two domains use survey manipulation in very different ways.

Pollsters typically don’t hide their crafty questioning methods. Most political polls are made public, and it never fails to surprise the mainstream media and voter advocacy groups when most of them feature questions that are obviously designed to elicit a specific answer.

Unfortunately, the general public doesn’t care. Pollsters don’t need to worry about whether respondents are savvy about survey bias, because the average poll-taker is largely ignorant about the issues they’re opining on.

Note: There are numerous reputable polling agencies, but the majority of political polls are conducted by agencies representing political parties or organizations that tend to lean towards one side of the political binary.

In science, the situation is nearly the opposite. Many scientists take great pains to craft unbiased questions. And when they let one slip through, it’s usually caught in peer review.

However, it’s far too often the case that scientists will omit the survey questions when they write up the research and merely summarize the gist of the survey. Worse, many will use colloquial terminology to ask manipulative questions during the survey, but use scientific jargon that sounds less biased when summarizing responses in their research papers.

An AI system that works somewhat like the Remesh platform could revolutionize both fields and offer protections to the general public that simply aren’t possible otherwise.

Objectively, we could solve the survey manipulation problem by doing away with polls and surveys altogether and, instead, having one-on-one conversations to understand what voters and study subjects really think.

This, of course, is unrealistic. It would be impossible to ask all 6.7 million people in Libya exactly how they feel about the current political situation, for example, because even if you only spent 10 minutes per person it would take a single interviewer working around the clock about 127 years. Not to mention we’re right back to hoping the pollster or surveyor isn’t biased, corrupt, or ignorant.
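For the curious, the 127-year figure holds up if you assume one interviewer talking nonstop, with no breaks between conversations. Here is a rough sketch of that arithmetic. The function name and the eight-hours-a-day variant are our own illustration, not anything from Remesh or the UN, and the year is treated as a flat 365 days.

```python
# Back-of-the-envelope check of the one-on-one interview estimate.
POPULATION = 6_700_000      # people in Libya, figure used in the article
MINUTES_PER_PERSON = 10     # interview length, figure used in the article
DAYS_PER_YEAR = 365         # assumption: ignore leap years


def years_to_interview(population, minutes_each, hours_per_day=24):
    """Years one interviewer needs, working `hours_per_day` every single day."""
    total_minutes = population * minutes_each
    minutes_worked_per_year = hours_per_day * 60 * DAYS_PER_YEAR
    return total_minutes / minutes_worked_per_year


if __name__ == "__main__":
    nonstop = years_to_interview(POPULATION, MINUTES_PER_PERSON)
    workday = years_to_interview(POPULATION, MINUTES_PER_PERSON, hours_per_day=8)
    print(f"Around the clock: {nonstop:.1f} years")
    print(f"Eight hours a day: {workday:.1f} years")
```

Under a more humane schedule the number only gets worse, which is the point: one-on-one conversation doesn't scale without a lot of interviewers or some kind of automation.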

But AI can pay attention to thousands of streams of data simultaneously. We can do away with traditional polls and surveys by using AI to not only present the questions and record the answers, but to identify bias and collate and interpret the results in real time.

This would create transparency and hold those asking the questions accountable for any misleading questions, intentional or otherwise. Furthermore, it would give pollsters and scientists the opportunity to ask follow-up questions, explain confusion, and deal with quirky data immediately.

As long as the AI worked as a referee to keep the people asking the questions from using well-known manipulation techniques, we could turn soft polling into hard data.

This isn’t a solution without its own problems though.

Humans are often ignorant or corrupt, which makes us excellent manipulators. AI can’t choose to lie or cheat. That makes it great for moderation, but we still have to worry about bias because it’s created by humans and trained on data that’s curated by humans.
