Although mental health struggles are by no means a new phenomenon, research shows that anxiety and depression are at an all-time high. Multiple studies show that technology, specifically social media, is detrimental to people’s mental wellbeing. However, tech companies are taking on some of the responsibility to help those struggling with their mental health.

Yesterday, on World Mental Health Day, Pinterest announced in a blog post that for the past year it has been using machine learning techniques to identify and automatically hide content that displays, rationalizes, or encourages self-injury. Using this technology, the social networking company says it has achieved an 88 percent reduction in user reports of self-harm content, and it can now remove harmful content three times faster than before.

Pinterest is also cleaning up its platform by removing more than 4,600 search terms and phrases related to self-harm. Now, if someone searches for one of these removed terms, links to free and confidential support from expert resources are prominently displayed on their boards and homepage. The approach was developed with guidance from leading external emotional health experts, including the National Suicide Prevention Lifeline, Vibrant Emotional Health, and Samaritans.

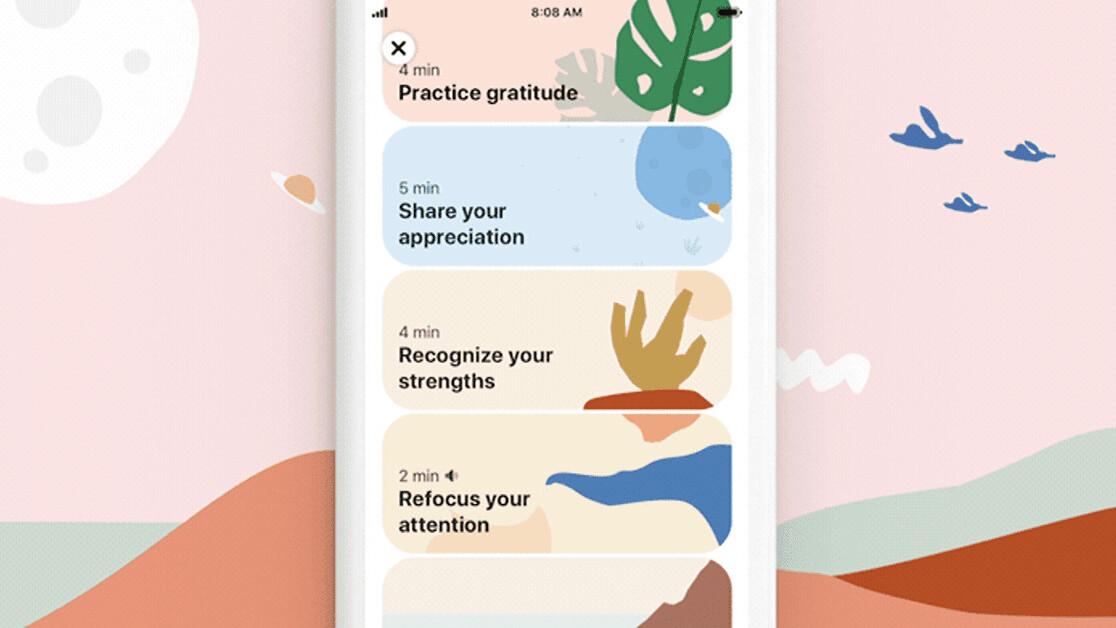

Earlier this year, Pinterest introduced a collection of emotional well-being activities to its iOS app in the US. Previously, these activities, which aim to help people struggling with their mental health, only appeared when someone searched for something that indicated they were feeling down, like “stress relief” or “sad quotes.” Pinterest said it didn’t want people to have to search for something sad to get these resources, so now all users have to do is search for #pinterestwellbeing to find exercises to improve their mood.

These resources include exercises such as “Refocus your attention,” “Take a breath,” and “Feel compassion for others.”

A study by the National Centre for Social Research found that over the past 14 years, the number of girls and young women self-harming has tripled. Amid these worrying findings, social media companies were placed under a new statutory duty of care, and can now face fines, prosecution, and the risk of being blocked from operating in the UK if they fail to protect their users from online harm.

Last month, Facebook tightened its policies on self-harm content by no longer allowing graphic self-harm images, aiming to avoid “unintentional promoting or triggering self-harm, even when someone is seeking support or expressing themselves to aid their recovery.”

Alongside this, Instagram made it harder to search for harmful content and stopped self-harm content from being recommended on its ‘Explore’ page.

Earlier this year, Instagram released a tool called “Sensitivity Screens” that automatically hides potentially harmful and offensive content. But researchers raised concerns over how effective these trigger warnings actually are. While some advocates argued that sensitivity screens are simple acts of consideration that could prevent trauma, others argued that they offer little help and may even harm people’s mental health by increasing feelings of anxiety.

While technology has been blamed for harming people’s mental health, fostering feelings of loneliness and isolation, it’s promising to see some of these platforms take responsibility and recognize their ability to make change on a wider scale.
