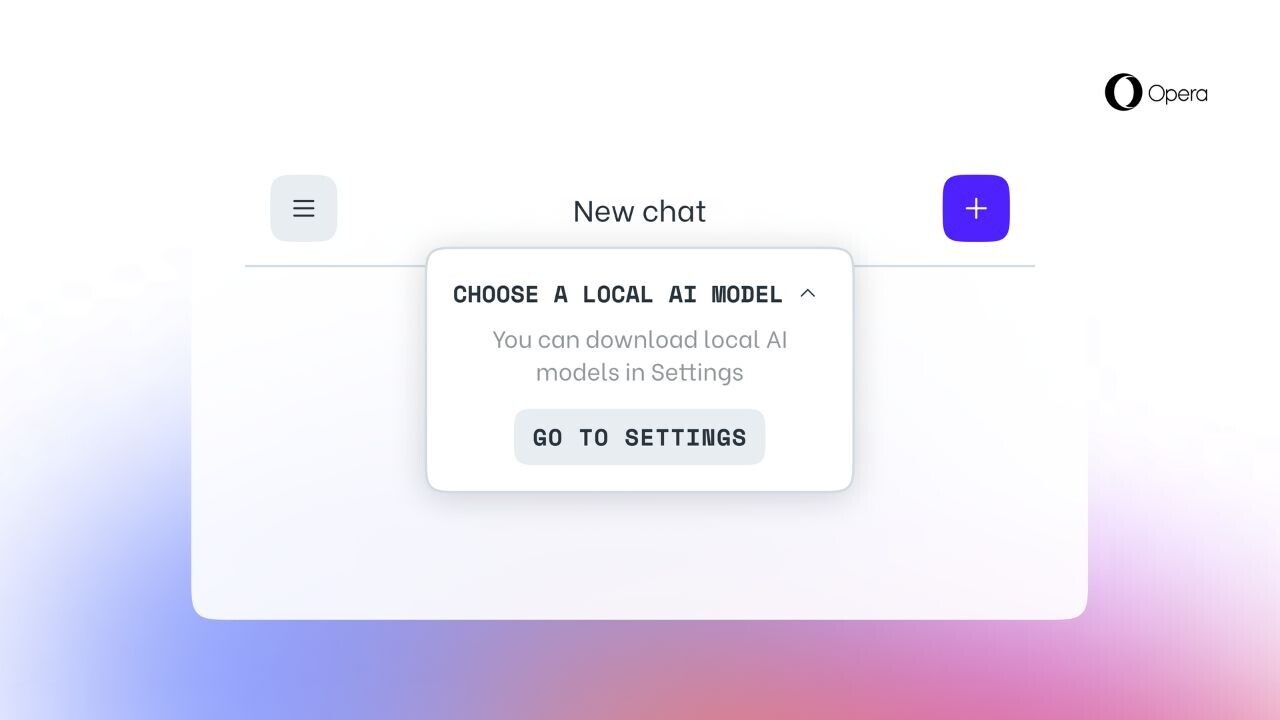

Opera has become the first major browser to offer built-in access to local AI models.

Starting today, the Oslo-based company is introducing experimental support for 150 variants of local Large Language Models (LLMs), covering approximately 50 families. These include Mixtral from Mistral AI, Llama from Meta, Gemma from Google, and Vicuna.

The introduction of built-in local LLMs comes with a series of advantages, according to Jan Standal, VP at Opera.

“Local LLMs allow you to process your prompts directly on your machine, without the need to send data to a server,” Standal told TNW. “Adding access to them in the browser allows for easier access to local AI and gives our users the ability to process their prompts locally, in full privacy or even offline.”

Another benefit of local models is that prompts don't make a round trip to external servers, which can speed up response times.

The feature is available in the developer stream of Opera One as part of the company's AI Feature Drops Program for early adopters. It's provided as a complementary addition to Opera's native Aria AI service. Whether the built-in local LLMs will become part of the company's main browsers depends on feedback from the early adopter community and on future developments in AI.

To test any of the available LLMs, users need to upgrade to the latest version of Opera Developer and activate the feature. Once a model variant is downloaded, it replaces Aria until users switch back to Aria or start a new chat with it.

"The rapid evolution of AI is enabling completely new use cases for how to browse the web," Standal said. "With local LLMs, we are propelling this further, and beginning to explore a completely new and unexplored approach that could make browsing the web better in the future."

Currently, Opera accounts for an estimated 4.88% of the browser market in Europe and 3.15% globally. The browser already stands out with built-in features such as its free VPN, ad blocker, and cashback service, and access to local LLMs could give the company an edge in an increasingly competitive AI landscape.