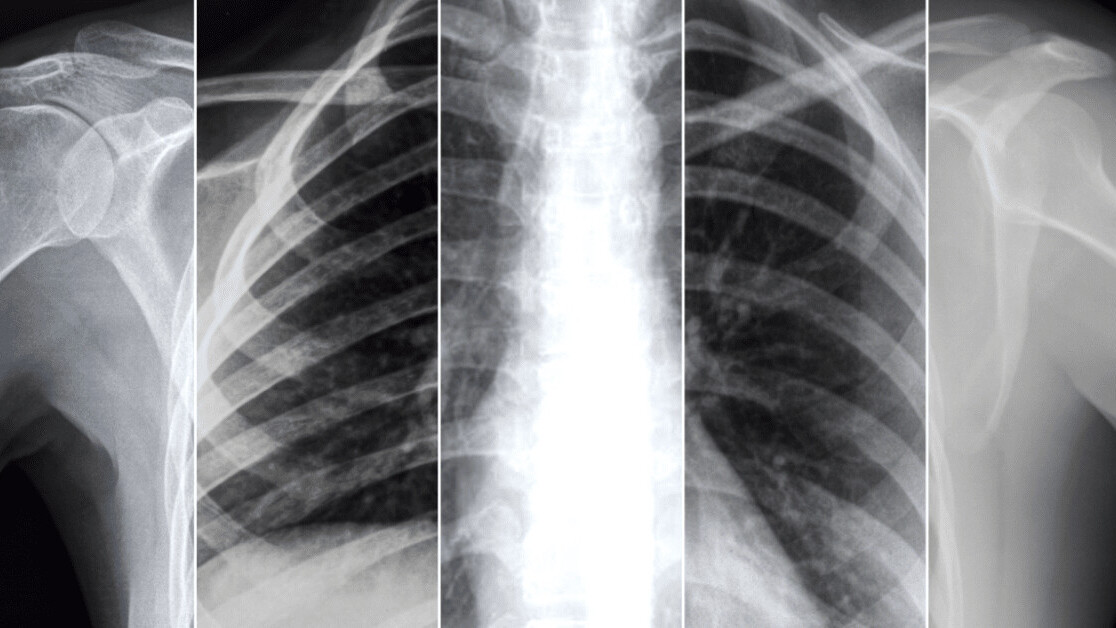

Researchers from Facebook and NYU Langone Health have created AI models that scan X-rays to predict how a COVID-19 patient’s condition will develop.

The team says that their system can forecast whether a patient may need more intensive care resources up to four days in advance. They believe hospitals could use it to anticipate demand for resources and avoid sending at-risk patients home too early.

Their approach differs from most previous attempts to predict COVID-19 deterioration with machine learning on X-rays.

Those attempts typically use supervised training on images from a single point in time. This method has shown promise, but its potential is constrained by the time-intensive process of manually labeling data.


These limits led the researchers to use self-supervised learning instead.

They first pre-trained their system on two public X-ray datasets, using a self-supervised learning technique called Momentum Contrast (MoCo). This allowed them to use a large quantity of non-COVID X-ray data to train their neural network to extract information from the images.
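MoCo trains a "query" encoder so that each image's embedding matches the embedding of a differently augmented copy of the same image, produced by a slowly updated "momentum" encoder, while pushing it away from a queue of embeddings kept from earlier batches. The minimal numpy sketch below shows only that objective and the two update rules; it is not the team's code. Random arrays stand in for encoder outputs, and the temperature of 0.07 and momentum of 0.999 are the defaults from the original MoCo paper, not values confirmed for this study.

```python
import numpy as np

def l2_normalize(x, axis=-1):
    # Scale each embedding to unit length so dot products are cosine similarities.
    return x / np.linalg.norm(x, axis=axis, keepdims=True)

def momentum_update(key_params, query_params, m=0.999):
    # The key encoder is an exponential moving average of the query encoder's
    # weights; it is not trained by backpropagation.
    return {name: m * key_params[name] + (1.0 - m) * query_params[name]
            for name in key_params}

def info_nce_loss(q, k, queue, temperature=0.07):
    """Contrastive (InfoNCE) loss as used by MoCo.

    q:     (N, D) query embeddings of one augmented view of each image
    k:     (N, D) key embeddings of a second view of the same images (positives)
    queue: (K, D) key embeddings from earlier batches (negatives)
    """
    q, k, queue = l2_normalize(q), l2_normalize(k), l2_normalize(queue)
    pos = np.sum(q * k, axis=1, keepdims=True)        # (N, 1) similarity to the positive
    neg = q @ queue.T                                  # (N, K) similarity to negatives
    logits = np.concatenate([pos, neg], axis=1) / temperature
    # Cross-entropy where the correct "class" is column 0 (the positive key).
    logits -= logits.max(axis=1, keepdims=True)        # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_probs[:, 0].mean()

def update_queue(queue, k):
    # Enqueue the newest keys at the front and drop the oldest, keeping the size fixed.
    return np.concatenate([k, queue], axis=0)[: len(queue)]

# Usage with stand-in embeddings (hypothetical sizes: batch 8, dim 128, queue 64).
rng = np.random.default_rng(0)
q = rng.normal(size=(8, 128))
queue = rng.normal(size=(64, 128))
loss_aligned = info_nce_loss(q, q.copy(), queue)                   # views agree
loss_random = info_nce_loss(q, rng.normal(size=(8, 128)), queue)  # views unrelated
```

The queue is what lets MoCo compare each image against many negatives without a huge batch, which is part of why it suits pre-training on large unlabeled X-ray collections.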

Predicting COVID-19 deterioration

They used the pre-trained model as the basis for classifiers that predict whether a COVID-19 patient’s condition is likely to worsen, fine-tuning it on an extended version of the NYU COVID-19 dataset.

This smaller dataset, around 27,000 X-ray images from 5,000 patients, was labeled to indicate whether the patient’s condition deteriorated within 24, 48, 72, or 96 hours of each scan.
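The article does not describe how these labels were built. As a hypothetical illustration, the sketch below turns a scan timestamp and an adverse-event timestamp into one binary label per horizon. The helper name `deterioration_labels`, the rule that a missing event means a negative label, and the decision to ignore events before the scan are all assumptions.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional, Sequence

# Prediction horizons named in the article (hours after the scan).
HORIZONS_HOURS = (24, 48, 72, 96)

def deterioration_labels(
    scan_time: datetime,
    event_time: Optional[datetime],
    horizons: Sequence[int] = HORIZONS_HOURS,
) -> Dict[int, int]:
    """Hypothetical helper: map each horizon to 1 if an adverse event occurred
    within that many hours after the scan, else 0.

    event_time is None when no adverse event was recorded (an assumption; real
    data would also need to handle patients discharged or censored early).
    """
    labels = {}
    for h in horizons:
        if event_time is None:
            labels[h] = 0
        else:
            delta = event_time - scan_time
            # Events before the scan do not count as future deterioration.
            labels[h] = int(timedelta(0) <= delta <= timedelta(hours=h))
    return labels

scan = datetime(2020, 4, 1, 8, 0)
print(deterioration_labels(scan, scan + timedelta(hours=50)))
# {24: 0, 48: 0, 72: 1, 96: 1}
```

Because the horizons are nested, a positive label at 24 hours implies positive labels at every longer horizon, which is why the longer-horizon tasks have more positive examples to learn from.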

The team built one classifier that predicts patient deterioration based on a single X-ray. Another makes its forecasts using a sequence of X-rays, by aggregating the image features through a Transformer model. A third model estimates how much supplemental oxygen patients might need by analyzing one X-ray.
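The article says only that the multi-image model aggregates per-image features with a Transformer. As a rough, hypothetical illustration, the numpy sketch below applies a single self-attention layer (the core operation of a Transformer encoder, without its feed-forward sublayer or layer normalization) to a patient's sequence of image features, mean-pools over time, and outputs a deterioration probability. The weights are random placeholders, and the feature size, pooling, and output head are assumptions, not the team's architecture.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(feats, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    feats: (T, D) one feature vector per X-ray of the same patient.
    """
    q, k, v = feats @ w_q, feats @ w_k, feats @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])   # (T, T) relevance of each scan to each other scan
    return softmax(scores, axis=-1) @ v        # (T, D) each scan mixes in context from the others

def predict_deterioration(feats, params):
    # Residual connection, as in a Transformer block, then average over the scans.
    h = feats + self_attention(feats, params["w_q"], params["w_k"], params["w_v"])
    pooled = h.mean(axis=0)
    logit = float(pooled @ params["w_out"] + params["b_out"])
    return 1.0 / (1.0 + np.exp(-logit))       # probability of deterioration

# Usage with placeholder weights and stand-in features (hypothetical size D=16).
rng = np.random.default_rng(0)
D = 16
params = {name: rng.normal(scale=0.1, size=(D, D)) for name in ("w_q", "w_k", "w_v")}
params["w_out"] = rng.normal(scale=0.1, size=D)
params["b_out"] = 0.0
scans = rng.normal(size=(3, D))  # features for three X-rays of one patient
print(predict_deterioration(scans, params))
```

The same function accepts any number of scans, including one. Because this sketch has no positional or time encoding, shuffling the scans leaves the prediction unchanged; a model meant to capture how an infection evolves would need to add one.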

They say using a sequence of X-rays is particularly valuable: the multi-image model is more accurate for longer-term predictions, and it accounts for how infections evolve over time.

Their study showed the models were effective at predicting ICU needs, mortality, and overall adverse events over longer horizons (up to 96 hours):

> Our multi-image model performance surpassed that of all single-image models. In comparison to radiologists, our multi-image prediction model was comparable in its ability to predict patient deterioration and stronger in its ability to predict mortality.

The team has open-sourced the pre-trained models so that other researchers and hospitals can fine-tune them on their own COVID-19 patient data using a single GPU.

You can read the study paper on the preprint server arXiv.org.
