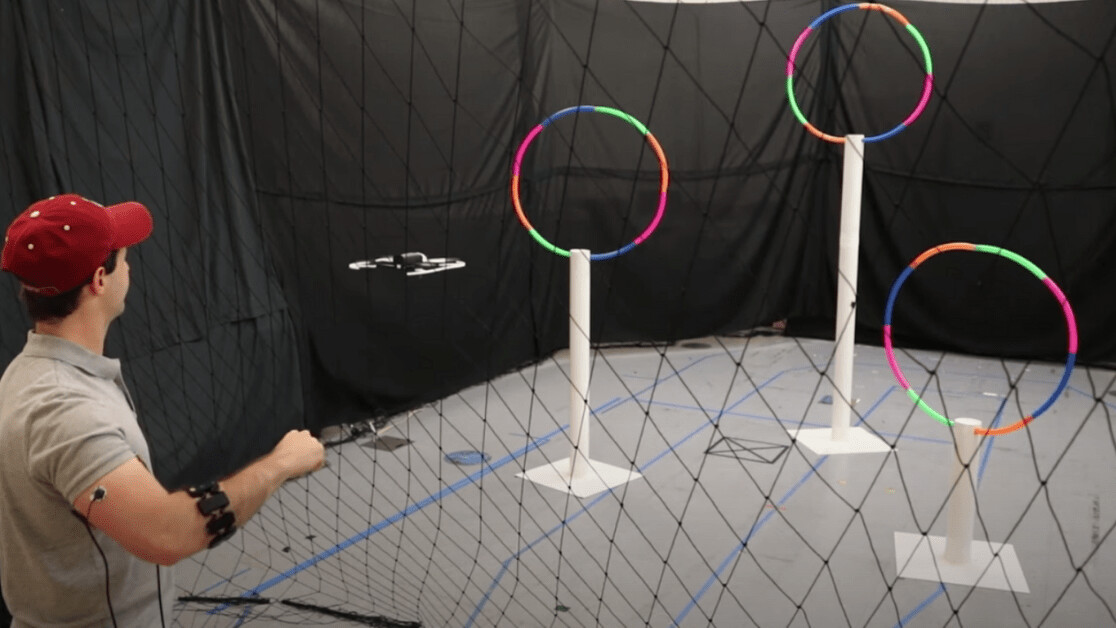

A new robot control system created at MIT CSAIL lets you pilot a drone just by moving your arms.

The system, called Conduct-A-Bot, tracks arm movements with wearable motion sensors and records muscle signals through electrodes attached to the skin.

Algorithms then convert the muscle and motion data into drone commands. Rotate your wrist and the drone turns; flick your hand up and it climbs; clench your fist and it accelerates; stiffen your arm and it stops.
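For the curious, the final step of that pipeline, turning a recognized gesture into a command, might look something like the minimal Python sketch below. It assumes that a separate classifier (not shown) has already reduced the muscle and motion signals to one of the four gestures described above; the names `Gesture`, `DroneCommand` and `command_for` are hypothetical and are not taken from Conduct-A-Bot's actual code.

```python
from enum import Enum
from typing import Optional


class Gesture(Enum):
    """Hypothetical labels a gesture classifier might output."""
    ROTATE_WRIST = "rotate_wrist"
    FLICK_UP = "flick_up"
    CLENCH_FIST = "clench_fist"
    STIFFEN_ARM = "stiffen_arm"


class DroneCommand(Enum):
    """Hypothetical drone commands matching the article's examples."""
    TURN = "turn"
    RISE = "rise"
    ACCELERATE = "accelerate"
    STOP = "stop"


# Lookup table following the gesture-to-command mapping in the article.
GESTURE_TO_COMMAND = {
    Gesture.ROTATE_WRIST: DroneCommand.TURN,
    Gesture.FLICK_UP: DroneCommand.RISE,
    Gesture.CLENCH_FIST: DroneCommand.ACCELERATE,
    Gesture.STIFFEN_ARM: DroneCommand.STOP,
}


def command_for(gesture: Optional[Gesture]) -> Optional[DroneCommand]:
    """Return the command for a recognized gesture, or None if nothing was recognized."""
    if gesture is None:
        return None
    return GESTURE_TO_COMMAND.get(gesture)


if __name__ == "__main__":
    print(command_for(Gesture.STIFFEN_ARM))  # prints DroneCommand.STOP
```

Returning `None` when no gesture is recognized is an illustrative choice: it leaves the caller to decide what the drone should do, for example hover in place.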



Robotic intuition

The method is a more natural way of interacting with tech than touching screens or flicking joysticks, as it makes machines adapt to people, rather than the other way around.

“Understanding our gestures could help robots interpret more of the nonverbal cues that we naturally use in everyday life,” said Joseph DelPreto, the lead author of a paper on the method.

“This type of system could help make interacting with a robot more similar to interacting with another person, and make it easier for someone to start using robots without prior experience or external sensors.”

The control method would suit a range of human-robot interactions, from navigating menus on tablets to dropping off medications while maintaining social distancing.

However, it isn’t quite ready to fly a real plane yet. In tests, the drone correctly responded to 81.6% of gestures, which means nearly one in five was misread, so a stray movement could easily cause a crash. Probably best for pilots to keep their hands on the controls for now.
