Microsoft has built a new AI image-captioning system that described photos more accurately than humans in limited tests.

The model has been added to Seeing AI, a free app for people with visual impairments that uses a smartphone camera to read text, identify people, and describe objects and surroundings.

It’s also now available to app developers through the Computer Vision API in Azure Cognitive Services, and will start rolling out in Microsoft Word, Outlook, and PowerPoint later this year.

The model can generate “alt text” image descriptions for web pages and documents, an important feature for people with limited vision that’s all-too-often unavailable.

“Ideally, everyone would include alt text for all images in documents, on the web, in social media – as this enables people who are blind to access the content and participate in the conversation,” said Saqib Shaikh, a software engineering manager at Microsoft’s AI platform group. “But, alas, people don’t. So, there are several apps that use image captioning as [a] way to fill in alt text when it’s missing.”

[Read: Microsoft unveils efforts to make AI more accessible to people with disabilities]

The algorithm now tops the leaderboard of an image-captioning benchmark called nocaps. Microsoft achieved this by pre-training a large AI model on a dataset of images paired with word tags — rather than full captions, which are less efficient to create. Each of the tags was mapped to a specific object in an image.

The pre-trained model was then fine-tuned on a dataset of captioned images, which enabled it to compose sentences. It then used its “visual vocabulary” to create captions for images containing novel objects.

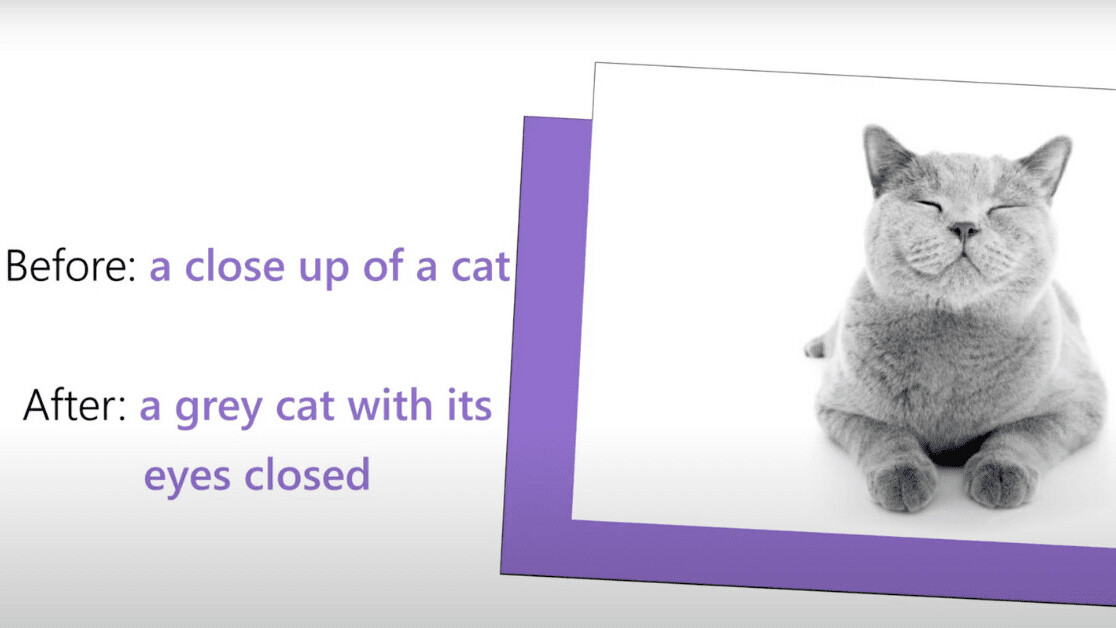

Microsoft said the model is twice as good as the one it’s used in products since 2015. The image below shows how these improvements work in practice:

However, the benchmark performance achievement doesn’t mean the model will be better than humans at image captioning in the real world. Harsh Agrawal, one of the creators of the benchmark, told The Verge that its evaluation metrics “only roughly correlate with human preferences” and that it “only covers a small percentage of all the possible visual concepts.”

Get the TNW newsletter

Get the most important tech news in your inbox each week.