In this series of blog posts, I’ve enjoyed shedding some light on how we approach marketing at The Next Web through Web analytics, Search Engine Optimization (SEO), Conversion Rate Optimization (CRO), social media and more. This piece focuses on what data we use from Google Search Console and how we process it at a larger scale.

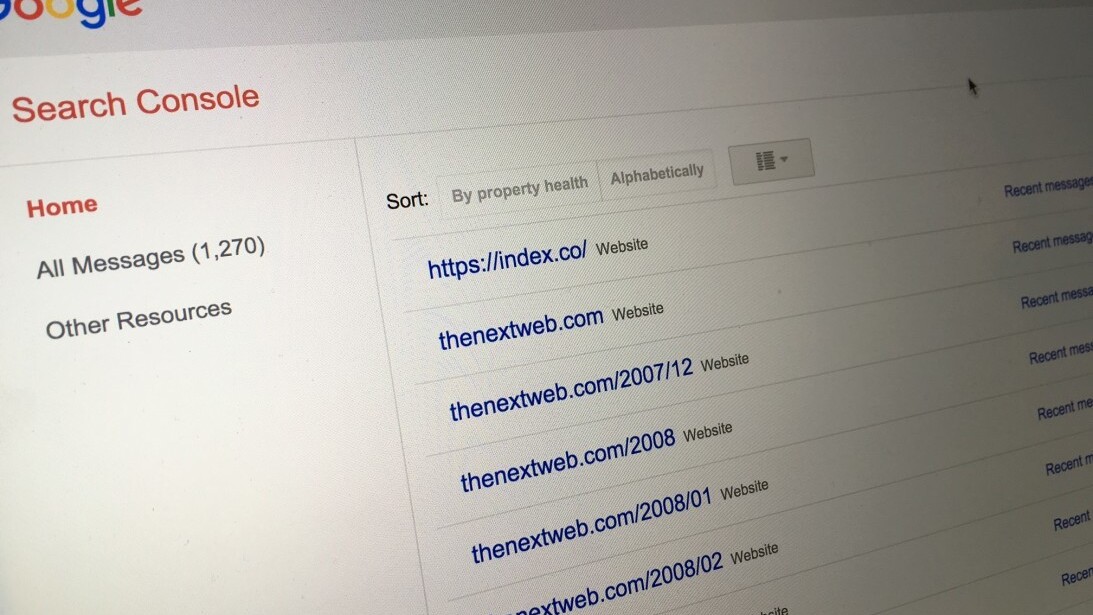

A couple of weeks ago I did a session at Friends of Search, a great conference in Amsterdam focused on search engine marketing. One of the numbers I presented there was that we have collected over 850 properties in Google Search Console, which is pretty weird for a company that currently has only three sites (The Next Web, Index.co and tqams.com).

I received a lot of questions on why we have so many properties and the purpose behind this strategy. In this article I’d like to explain why we do it and the way we’re using Google Search Console.

The benefits of Google Search Console

Using Google Search Console at The Next Web gives us a lot of insight into how our content performs in search and what Google needs from it.

As we’re working on SEO with a relatively big team, we want to hear as soon as possible about errors in Google or on The Next Web’s website, so we can make the proper changes when needed. Yet the main reason we use it is to keep track of our progress and of the keywords people use in Google to come to TNW.

855 Properties, what were you thinking!? Why would you want to have so many properties?

The introduction of ‘not provided’ years ago meant that SEOs didn’t have access to their keywords anymore. This workaround allows us to get back a lot of data on our keywords.

Our tests showed that, instead of the default 5,000 keywords we get back from a single property, adding hundreds of properties gets us up to 100,000 unique keywords in our case. That covers roughly 60 to 80 percent of our organic search traffic; with only 5,000 keywords, it amounted to less than three percent.

Note: You won’t get every keyword back, and the numbers above count unique keywords.

How do we organize those properties?

Almost any subfolder that gets traffic through Google has its own property in Google Search Console. The more specific your property is, the more specific the Search Analytics data you get for just that folder. You’ll get access to more keywords by adding more properties for your subfolders.
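To make the subfolder idea concrete, here is a minimal sketch of how several properties can cover the same page. It assumes properties are matched purely by URL prefix; the `example.com` URLs and the function name `covering_properties` are made up for illustration and are not taken from our setup.

```python
def covering_properties(page_url, properties):
    """Return the properties whose URL prefix contains page_url, most specific first."""
    matches = [prop for prop in properties if page_url.startswith(prop)]
    # A longer prefix means a deeper subfolder, so sort by length, longest first.
    return sorted(matches, key=len, reverse=True)


# Hypothetical properties: the root plus two nested subfolders.
properties = [
    "https://example.com/",
    "https://example.com/insider/",
    "https://example.com/insider/2016/",
]

page = "https://example.com/insider/2016/some-post/"
for prop in covering_properties(page, properties):
    print(prop)
```

A page in a deep subfolder is reported by every property above it, which is why the keyword lists from different properties overlap and need deduplicating later on.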

How did we add them?

Pretty simple: we added the first dozen manually back in the day to prove the theory that this would work. When it became pretty clear that it did, we had to come up with a more robust solution to add the other ones.

Google Search Console has a pretty solid API these days. In the next block we’ll explain how the process worked and how you can do the same.

So you’re downloading 855 Search Analytics reports manually?

Nope! The same API also serves Search Analytics data, so we make those calls for all of our properties. We save the raw data in Google Cloud Storage and import the unique keywords directly into a database that is accessible to some of the people on our marketing team, making it instantly available for querying and, later on, for even heavier data analysis.
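A rough sketch of that flow in Python follows; it is not our production code. It assumes `service` is an authorised Search Console client, for instance one built with `google-api-python-client`. The helper names, the `example.com` data and the choice to keep the highest click count per keyword are assumptions made for this illustration.

```python
def fetch_query_rows(service, site_url, start_date, end_date, row_limit=5000):
    """Request the top queries for one property from the Search Analytics endpoint."""
    body = {
        "startDate": start_date,   # "YYYY-MM-DD"
        "endDate": end_date,
        "dimensions": ["query"],
        "rowLimit": row_limit,
    }
    response = service.searchanalytics().query(siteUrl=site_url, body=body).execute()
    # A property without data returns no "rows" key at all.
    return response.get("rows", [])


def unique_keywords(rows_by_property):
    """Merge rows from many properties into one entry per keyword.

    The same keyword shows up in a parent and a child property, so summing
    would double count. This sketch keeps the row with the most clicks.
    """
    best = {}
    for site_url, rows in rows_by_property.items():
        for row in rows:
            keyword = row["keys"][0]
            clicks = row.get("clicks", 0)
            if keyword not in best or clicks > best[keyword]["clicks"]:
                best[keyword] = {"clicks": clicks, "property": site_url}
    return best


# Hypothetical rows, shaped like the API response, to show the merge.
sample = {
    "https://example.com/": [
        {"keys": ["tnw"], "clicks": 10},
        {"keys": ["tech news"], "clicks": 4},
    ],
    "https://example.com/insider/": [
        {"keys": ["tnw"], "clicks": 3},
        {"keys": ["insider"], "clicks": 2},
    ],
}
merged = unique_keywords(sample)
print(len(merged), "unique keywords")
```

In practice you would loop `fetch_query_rows` over every property, write each raw response to storage, and only then load the merged keywords into the database.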

Why do we download the data?

That’s an easy one. By default, Search Analytics data in Google Search Console is only available for 90 days; after that it’s gone. So, for historical needs, we save (and back up) the data.
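One way to make sure nothing falls out of that 90-day window is to check regularly which days have not been archived yet. The sketch below assumes you keep a set of already-archived dates somewhere; the function name `missing_dates` and the example dates are hypothetical.

```python
from datetime import date, timedelta


def missing_dates(today, archived, retention_days=90):
    """List the days still inside the retention window that are not archived yet."""
    window = [today - timedelta(days=n) for n in range(1, retention_days + 1)]
    return sorted(day for day in window if day not in archived)


# Example with a short three-day window so the result is easy to follow.
already_saved = {date(2016, 3, 30)}
to_fetch = missing_dates(date(2016, 3, 31), already_saved, retention_days=3)
print([day.isoformat() for day in to_fetch])
```

Running this daily and fetching only the returned dates keeps the archive complete without downloading the same days over and over.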

How can you do the same?

You’re wondering now ‘How can I do the same thing without having to bother about manually adding the properties to my Google Search Console account?’ Well, it’s relatively easy, although you’ll need some coding knowledge as we’re going to use the Google Search Console API to automatically add the sites.

You’ll need this script and access to a Google Analytics account. You also need to make sure that you already have access to a main Google Search Console property for this domain, so that you can add more properties without further verification.

Here are the steps you’ll need to follow:

- Go in Google Analytics to Behavior > Site Content > Content Drilldown

- Make sure that you apply the default segment for Organic Traffic

- Pick a recent 30 to 60 day date range

- Export the first report to XLS

- Apply a secondary dimension with Page Path Level 2

- Export the second report to XLS

- What you’ll have now is a list of directories from report one and a second list of directories including subdirectories from report two

- The script that you’ll be able to find here on GitHub has a long array of directories that we’ll be adding

- Add the list of directories to the script that you’ve gotten from the XLS files

- Make sure that the script has the right information about your domain and user credentials

- Run the script

- Expect a ton of emails, as every property will send out an email to confirm that you’ve registered a new Search Console property (to both you and the colleagues that already had access)
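The last few steps can be sketched in Python as follows. This is not the script linked above, just a minimal illustration of the same idea. It assumes `service` is an authorised Search Console client (for example built with `google-api-python-client`) and that you have already copied the directories out of the two exports into a list. The helper names and the `example.com` domain are made up.

```python
def property_urls(domain, directories):
    """Turn exported directory paths into property URLs, skipping blanks and duplicates."""
    base = domain.rstrip("/")
    urls = []
    for directory in directories:
        path = directory.strip().strip("/")
        if not path:
            continue  # empty rows or the root itself, which is already a property
        url = f"{base}/{path}/"
        if url not in urls:
            urls.append(url)
    return urls


def add_properties(service, urls):
    """Register each URL as a Search Console property via the sites.add call."""
    added = []
    for url in urls:
        service.sites().add(siteUrl=url).execute()
        added.append(url)
    return added


# Hypothetical directories pasted in from the two exported reports.
directories = ["/insider/", "insider", "/insider/2016/", ""]
urls = property_urls("https://example.com/", directories)
for url in urls:
    print(url)
# add_properties(service, urls)  # needs real credentials, so left commented out
```

Printing the URLs before calling `add_properties` is a cheap sanity check, since every added property triggers a confirmation email.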

Ta-da! Now you have it all set to keep track of your keywords. If you have any questions or suggestions, let us know!

If you missed the previous posts in this series, don’t forget to check them out: #1: Heat maps, #2: Deep dive on A/B testing, #3: Learnings from our A/B tests, #4: From Marketing Manager to Recruiter, #5: Running ScreamingFrog in the Cloud, #6: What tools do we use?, #7: We track everything!, #8: Google Tag Manager and #9: A/B Testing with GTM.

This is a #TNWLife article, a look into the lives of those that work at The Next Web.
