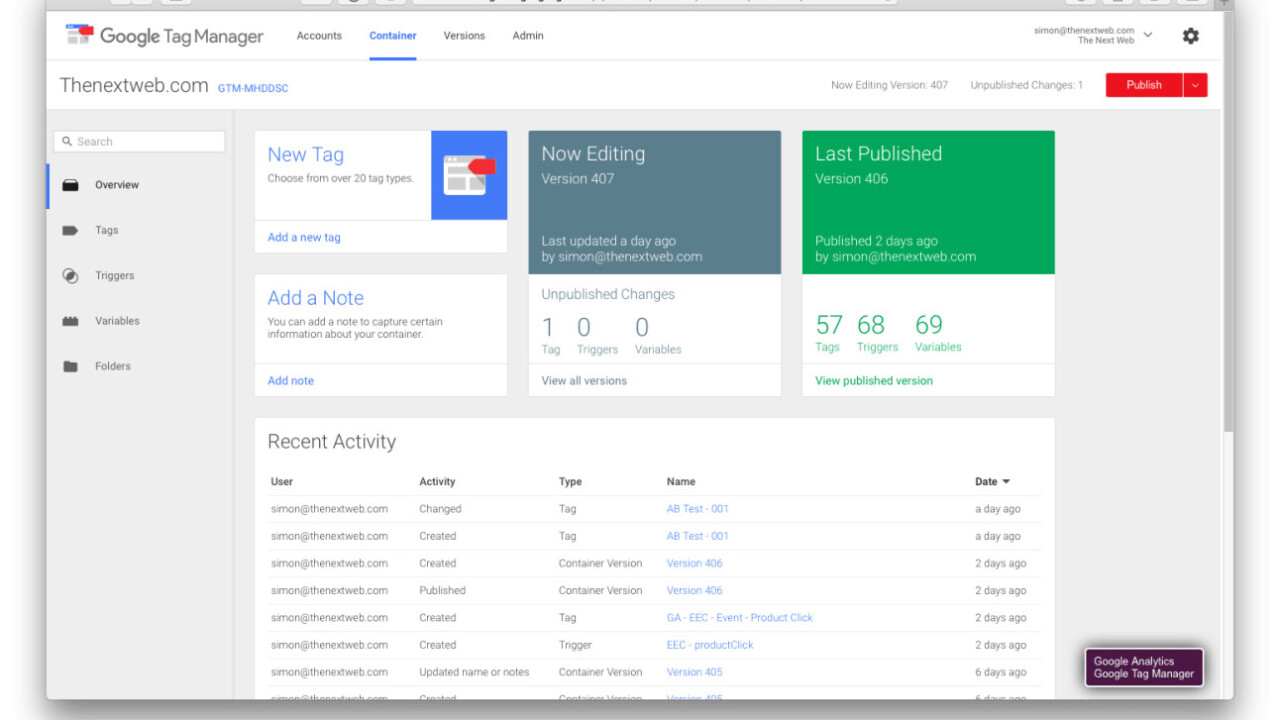

In this series of blog posts, I’ve enjoyed shedding some light onto how we approach marketing at The Next Web through Web analytics, Search Engine Optimization (SEO), Conversion Rate Optimization (CRO), social media and more. This piece focuses on how we work with Google Tag Manager and why we use it to run A/B Testing.

In a previous post we covered what our implementation of Google Tag Manager looks like. One of the most important reasons we use Google Tag Manager, though, is that for now it allows us to run A/B tests at large scale without too much trouble. So in this post we focus on what it brings us, why we decided to start using it for testing and which features we use while doing so.

If you want to know more about the way we run A/B Testing at The Next Web, read this too!

Why do we run testing through Google Tag Manager?

Cost effective

After we made sure that we were able to run more tests, we needed a platform that was ready to handle this amount of work, while also being very cost effective. Not every test we run will directly lead to us selling more advertising or more tickets to one of our conferences, so that’s why we need to make sure that the cost is low.

Most of the tools we looked at came with a price tag of $4,000 to $5,000 on average, which was hard for us to justify without knowing the results we’d get first (obviously). Google Tag Manager, a tool we were already using for other things, made it easy to do what we wanted without adding any extra costs.

But what about a ‘What you see is what you get’ (WYSIWYG) editor?

Yeah no! We tried to use it for our first three tests, but after that the tests got very complex and WYSIWYG wasn’t working anymore.

Alternatives?

When we started testing at a bigger scale last year, we looked into running our tests via Google Analytics using its Content Experiments feature. Google’s JS alternatives, which gave us the ability to redirect our users, looked promising at first, but eventually the limited number of tests (25!) that we could run and the issues we had pulling the data from the Google Analytics Reporting API stopped us in our tracks.

How are we using Google Tag Manager?

We create a custom HTML tag that includes our A/B testing script and the code for the variants. The number of variants is only limited by the number of characters that GTM allows in the custom HTML tag.
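
To give an idea of what such a tag could contain, here is a minimal sketch of a script that picks one variant at random and applies it. It is not our actual script (that lives in the repository mentioned further down); the function names, the test name and the shape of the `test` object are assumptions made for illustration.

```javascript
// Hypothetical sketch: names and structure are illustrative assumptions.
// Each variant is a function that changes the page; index 0 is the control.

// Map a random value in [0, 1) onto a variant index.
function pickVariant(variantCount, randomValue) {
  return Math.floor(randomValue * variantCount);
}

// Apply the chosen variant and return a label that can be sent to analytics.
function runTest(test, randomValue) {
  var index = pickVariant(test.variants.length, randomValue);
  test.variants[index]();
  return test.name + ':' + index;
}

// Example test with a control and one variant.
var applied = [];
var exampleTest = {
  name: 'sidebar-numbers',
  variants: [
    function () { applied.push('control'); },
    function () { applied.push('numbered'); }
  ]
};

// In a real tag the random value would come from Math.random().
var label = runTest(exampleTest, 0.75);
```

Passing the random value in as an argument keeps the assignment logic easy to check outside the browser.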

Triggers

To fire a tag, you need a trigger. We have multiple triggers set up for all the page templates that we have on our website. The triggers also check whether the device of the user is mobile or desktop. We get that data from a custom variable that checks the user agent of a visitor. We treat desktop and mobile as separate tests, because each needs its own development work and users behave differently on each.
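
A custom variable like this can be as small as one regular expression. The sketch below is an assumption about how such a check might look, not the exact variable we use; the pattern and the function name are illustrative, and real user-agent detection is messier than this.

```javascript
// Hypothetical sketch: a simple user-agent check, not a complete detector.
// Note that this pattern also classifies most tablets as 'mobile'.
function deviceCategory(userAgent) {
  var mobilePattern = /Mobi|Android|iPhone|iPod/i;
  return mobilePattern.test(userAgent) ? 'mobile' : 'desktop';
}
```

In GTM, a Custom JavaScript variable has to be an anonymous function that returns a value, so in practice this would be wrapped as `function() { return deviceCategory(navigator.userAgent); }` with the helper inlined.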

There are cases where we want to run a longer experiment on the users involved in a particular test and not add any more people, or we want to create a bucket of unique visitors before the test actually starts. For that we create a trigger that fires on a first-party cookie, plus a custom variable that reads the cookie, and add it as an extra condition to the trigger.
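
GTM’s built-in 1st Party Cookie variable does the reading for you, but the logic behind such a condition is roughly the following. The cookie name `ab_bucket` and its value are made up for this sketch.

```javascript
// Hypothetical sketch: the cookie name and value are illustrative assumptions.

// Read one cookie from a document.cookie-style string ("a=1; b=2").
function readCookie(cookieString, name) {
  var pairs = cookieString ? cookieString.split('; ') : [];
  for (var i = 0; i < pairs.length; i++) {
    var separator = pairs[i].indexOf('=');
    var key = pairs[i].substring(0, separator);
    if (key === name) {
      return decodeURIComponent(pairs[i].substring(separator + 1));
    }
  }
  return undefined;
}

// True only for visitors who were already placed in the test's bucket.
function isInBucket(cookieString) {
  return readCookie(cookieString, 'ab_bucket') === 'sidebar-numbers';
}
```

In the browser you would call `isInBucket(document.cookie)`; the trigger then only fires when that condition holds.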

Use case

One of the A/B tests we did was on the article pages of our site, which we only targeted at desktop visitors. We added a number, from one to five, to the ‘Most Popular’ posts in the sidebar. Our hypothesis was that by showing that it’s clearly a ‘top five’ list of our most popular posts at that moment, it would positively influence the number of clicks on those posts.

With only a few lines of JavaScript, the test was created and it was added to the A/B testing script. The trigger was set for only article pages and desktop users. We ran the test for seven days. After that we pulled the data from Google Analytics to analyze the result.
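
The variant itself really is only a few lines. The sketch below shows the idea rather than the exact code we ran; the function name is an assumption, and it works on anything that has a `textContent` property.

```javascript
// Hypothetical sketch of the variant: prefix each item with its position.
function numberElements(elements) {
  for (var i = 0; i < elements.length; i++) {
    elements[i].textContent = (i + 1) + '. ' + elements[i].textContent;
  }
}
```

On a real page this could be called as `numberElements(document.querySelectorAll('.most-popular a'))`, where the selector is a placeholder for whatever matches the sidebar links on your own site.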

Adding the numbers improved the conversion rate by 15.05 percent, from 0.38 percent to 0.44 percent, with a significance of 99.83 percent and a power of 99.32 percent. It also improved the conversion rate on the Latest stories below the most popular stories by 12.13 percent.
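
For anyone checking the arithmetic: relative uplift is the difference between the two conversion rates divided by the control rate. The sketch below applies that to the rounded rates quoted above, which gives roughly 15.8 percent rather than 15.05 percent. That gap is most likely down to rounding in the quoted rates, though that is an assumption. The function name is illustrative.

```javascript
// Relative uplift in percent: (variant - control) / control * 100.
function relativeUplift(controlRate, variantRate) {
  return (variantRate - controlRate) / controlRate * 100;
}

// Using the rounded conversion rates (in percent) from the test above.
var upliftFromRoundedRates = relativeUplift(0.38, 0.44);
```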

Want to see more tests that we ran? Check out this post.

What code do we use?

The geeks that have been following us will know that we’ve shared our code before here. Since then, we’ve updated it a bit so that we can run tests even more efficiently, and to help out the rest of the community.

Flickering Effect?

One of the biggest downsides of using Google Tag Manager for A/B Testing is that it still has a flickering effect on the pages that are loaded. That’s the biggest issue with running testing through the front-end. Other A/B testing vendors claim to have fixed this, but if you’re doing front-end testing, you will always have flickering and you should keep that in mind when coding your test.

One of the things we do to reduce the flickering effect is splitting up the GTM container snippet and moving the script part of the snippet all the way to the top of the head. That way GTM will load earlier, which in turn loads the A/B test earlier. It will also load Google Analytics earlier, improving the reliability of your tracking. Wider Funnel has also written some good tips to reduce flickering.

➤ You can find the code to start testing through Google Tag Manager yourself here.

For the full technical details about the source code and the features that we’re using, I’d like to point you to the README.

Features

A/B/n + Multivariate Testing

The scripts that you will find in the Git repository currently support regular A/B testing, as well as multivariate testing to test multiple variants against each other. Very soon, we’ll be adding another script that can be used to run any number of A/B tests from one script. We’ll post an update on Twitter as soon as this happens.

Google Analytics

All the data that we need for analyzing our testing can be found in Google Analytics. That’s why the script sends the data for the tests through a custom dimension on session level. Why on a session level? Together with the script, we make sure that a user can never be in more than one test at the same time, and the GA data helps us analyze it.

Advanced note: In our case, we set the custom dimension after the pageview is fired. This is an issue for most sites, as the custom dimension won’t be attached to the pageview and so won’t make it to Google Analytics. That is why you would normally need to send another event afterwards, so the custom dimension is attached to that hit.
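
As a sketch of what that looks like with Universal Analytics’ analytics.js: set the custom dimension, then send a non-interaction event so the dimension has a hit to travel with. The dimension index (`dimension1`), the event category and action, and the function name are assumptions here; use whatever matches your own property.

```javascript
// Hypothetical sketch: dimension index and event names are illustrative.
// `ga` is the analytics.js command queue function, passed in so the
// logic can be exercised without a browser.
function reportVariant(ga, testLabel) {
  // Attach the test label to subsequent hits via a custom dimension.
  ga('set', 'dimension1', testLabel);
  // Send a non-interaction event so the dimension reaches Google Analytics
  // without affecting bounce rate.
  ga('send', 'event', 'AB-Test', 'assigned', testLabel, { nonInteraction: true });
}
```

On a page where analytics.js is loaded this would be called as `reportVariant(window.ga, 'sidebar-numbers:1')`.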

What’s next?

Google?

Rumor is that Google is working on a competitor (Google Optimize?) to the well-known testing providers that might disrupt the industry. We can’t wait to see what it comes up with as the first signs we’ve seen look very promising.

Back-end Testing

As we’ve already mentioned, flickering is one of the biggest downsides in the way we do A/B testing, so back-end testing would really be a preferred option. It would also make integrations with our other datasets much easier if we had more of our testing structure on the back-end of our platforms. This is something we might start building towards the end of the year to support our long-term testing goals. We’re already talking to some companies who’ve embedded this completely into their testing processes.

You can and should help!

We’re hoping that you’re just as excited about testing as we are. That’s one of the main reasons we’ve open-sourced the code – maybe you can come up with new features or improvements and share them with all of us so the CRO industry can innovate even faster.

In the next blog post, I’ll talk more about the hacks we do in Google Search Console through our 850-plus properties.

If you missed the previous posts in this series, don’t forget to check them out: #1: Heat maps, #2: Deep dive on A/B testing, #3: Learnings from our A/B tests, #4: From Marketing Manager to Recruiter, #5: Running ScreamingFrog in the Cloud, #6: What tools do we use?, #7: We track everything! and #8: Google Tag Manager.

This is a #TNWLife article, a look into the lives of those that work at The Next Web.
