In 2019, the Santa Fe Independent School District in Texas ran a weeklong pilot program with the facial recognition firm AnyVision in its school hallways. With more than 5,000 student photos uploaded for the test run, AnyVision called the results “impressive” and shared its excitement with school administrators.

“Overall, we had over 164,000 detections the last 7 days running the pilot. We were able to detect students on multiple cameras and even detected one student 1100 times!” Taylor May, then a regional sales manager for AnyVision, said in an email to the school’s administrators.

The figure offers a rare glimpse into how often people can be identified through facial recognition, as the technology finds its way into more schools, stores, and public spaces like sports arenas and casinos.

May’s email was among hundreds of public records reviewed by The Markup documenting exchanges between the school district and AnyVision, a fast-growing facial recognition firm based in Israel that boasts hundreds of customers around the world, including schools, hospitals, casinos, sports stadiums, banks, and retail stores. One of those retail stores is Macy’s, which uses facial recognition to detect known shoplifters, according to Reuters. A supermarket chain in Spain is also using facial recognition, purportedly from AnyVision, to detect people with prior convictions or restraining orders and keep them out of 40 of its stores, according to research published by the European Network of Corporate Observatories.

Neither Macy’s nor supermarket chain Mercadona responded to requests for comment.

The public records The Markup reviewed included a 2019 user guide for AnyVision’s software, called “Better Tomorrow.” The manual details AnyVision’s tracking capabilities and offers insight into how people can be identified and followed through its facial recognition.

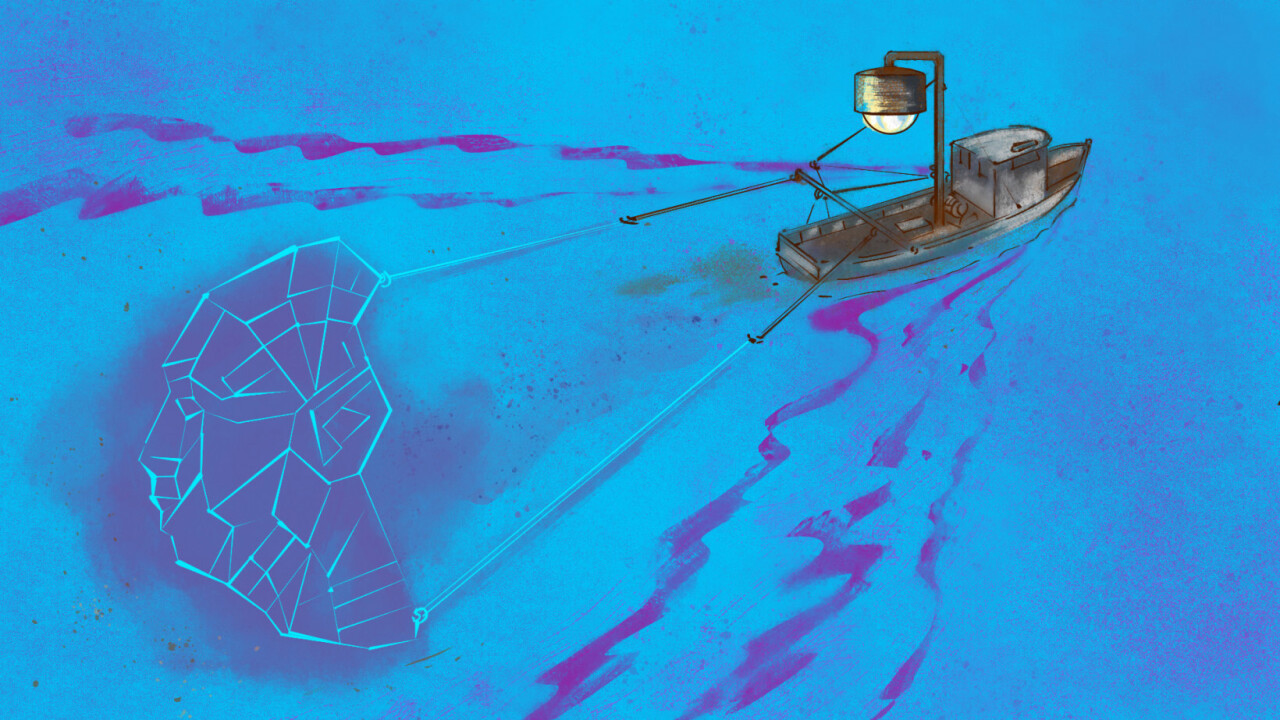

The growth of facial recognition has raised privacy and civil liberties concerns over the technology’s ability to constantly monitor people and track their movements. In June, the European Data Protection Board and the European Data Protection Supervisor called for a facial recognition ban in public spaces, warning that “deploying remote biometric identification in publicly accessible spaces means the end of anonymity in those places.”

Lawmakers, privacy advocates, and civil rights organizations have also pushed back against facial recognition because of error rates that disproportionately harm people of color. A 2018 research paper by Joy Buolamwini and Timnit Gebru highlighted how facial recognition technology from companies like Microsoft and IBM was consistently less accurate for people of color and for women.

In December 2019, the National Institute of Standards and Technology also found that the majority of facial recognition algorithms produce more false positives for people of color. There have been at least three known cases in which a Black man was wrongfully arrested based on facial recognition.

“Better Tomorrow” is marketed as a watchlist-based facial recognition program that detects only people who are a known concern. For example, stores can buy it to detect suspected shoplifters, while schools can upload sexual predator databases to their watchlists.

But AnyVision’s user guide shows that its software is logging all faces that appear on camera, not just people of interest. For students, that can mean having their faces captured more than 1,000 times a week.

And they’re not just logged. Faces that are detected but aren’t on any watchlist are still analyzed by AnyVision’s algorithms, the manual noted. The algorithm groups faces it believes belong to the same person, and those groups can later be added to watchlists.

AnyVision’s user guide said the software keeps all detection records for 30 days by default and allows customers to run reverse image searches against that database. That means a user can upload photos of a known person and find out whether they were caught on camera at any point during the previous 30 days.

The software offers a “Privacy Mode” feature in which it ignores all faces not on a watchlist, while another feature called “GDPR Mode” blurs non-watchlist faces on video playback and downloads. The Santa Fe Independent School District didn’t respond to a request for comment, including on whether it enabled the Privacy Mode feature.

“We do not activate these modes by default but we do educate our customers about them,” AnyVision’s chief marketing officer, Dean Nicolls, said in an email. “Their decision to activate or not activate is largely based on their particular use case, industry, geography, and the prevailing privacy regulations.”

AnyVision boasted of its grouping feature in a “Use Cases” document for smart cities, stating that it was capable of collecting face images of all individuals who pass by the camera. It also said that this could be used to “track [a] suspect’s route throughout multiple cameras in the city.”

The Santa Fe Independent School District’s police department wanted to do just that in October 2019, according to public records.

In an email obtained through a public records request, the school district police department’s Sgt. Ruben Espinoza said officers were having trouble identifying a suspected drug dealer who was also a high school student. AnyVision’s May responded, “Let’s upload the screenshots of the students and do a search through our software for any matches for the last week.”

The school district originally purchased AnyVision after a mass shooting in 2018, hoping the technology would prevent another tragedy. By January 2020, the school district had uploaded 2,967 photos of students to AnyVision’s database.

James Grassmuck, a member of the school district’s board of trustees who supported using facial recognition, said he hasn’t heard any complaints about privacy or misidentifications since it was installed.

“They’re not using the information to go through and invade people’s privacy on a daily basis,” Grassmuck said. “It’s another layer in our security, and after what we’ve been through, we’ll take every layer of security we can get.”

The Santa Fe Independent School District’s neighbor, the Texas City Independent School District, also purchased AnyVision as a protective measure against school shootings. According to WIRED, it has since been used in attempts to identify a kid who had been licking a neighborhood surveillance camera, to remove an expelled student from his sister’s graduation, and to ban a woman from school grounds after an argument with the district’s head of security.

“The mission creep issue is a real concern when you initially build out a system to find that one person who’s been suspended and is incredibly dangerous, and all of a sudden you’ve enrolled all student photos and can track them wherever they go,” Clare Garvie, a senior associate at the Georgetown University Law Center’s Center on Privacy & Technology, said. “You’ve built a system that’s essentially like putting an ankle monitor on all your kids.”

This article by Alfred Ng was originally published on The Markup and was republished under the Creative Commons Attribution-NonCommercial-NoDerivatives license.