One of the useful features of search engines like Google is the autocomplete function that enables users to find fast answers to their questions or queries. However, autocomplete search functions are based on ambiguous algorithms that have been widely criticized because they often provide biased and racist results.

The ambiguity of these algorithms stems from the fact that most of us know very little about them, which has led some to refer to them as “black boxes.” Search engines and social media platforms do not offer any meaningful insight or details on the nature of the algorithms they employ. As users, we have the right to know the criteria used to produce search results and how they are customized for individual users, including how people are labelled by Google’s search engine algorithms.

To do so, we can use a reverse engineering process, conducting multiple online searches on a specific platform to better understand the rules that are in place. For example, the hashtag #fentanyl can currently be searched and used on Twitter but is not allowed on Instagram, which indicates the kind of rules in place on each platform.

Automated information

When searching for celebrities using Google, there is often a brief subtitle and thumbnail picture associated with the person that is automatically generated by Google.

Our recent research showed how Google’s search engine normalizes conspiracy theorists, hate figures and other controversial people by offering neutral and even sometimes positive subtitles. We used virtual private networks (VPNs) to conceal our locations and hide our browsing histories to ensure that search results were not based on our geographical location or search histories.

We found, for example, that Alex Jones, “the most prolific conspiracy theorist in contemporary America,” is defined as an “American radio host,” while David Icke, who is also known for spreading conspiracies, is described as a “former footballer.” These terms are considered by Google as the defining characteristics of these individuals and can mislead the public.

Dynamic descriptors

In the short time since our research was conducted in the fall of 2021, search results seem to have changed.

I found that some of the subtitles that we originally identified have been modified, removed or replaced. For example, the Norwegian terrorist Anders Breivik was subtitled “Convicted criminal,” yet now there is no label associated with him.

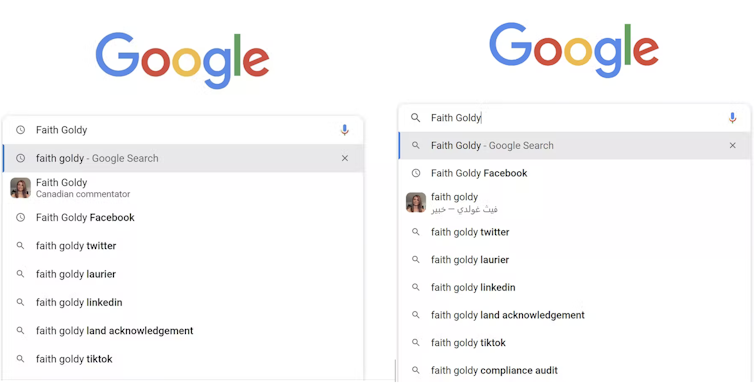

Faith Goldy, the far-right Canadian white nationalist who was banned from Facebook for spreading hate speech, did not have a subtitle. Now, however, her new Google subtitle is “Canadian commentator.”

There is no indication of what “commentator” is meant to suggest. The same is true of American white supremacist Richard B. Spencer. Spencer did not have a label a few months ago, but is now an “American editor,” which certainly does not characterize his legacy.

Another change relates to Lauren Southern, a Canadian far-right figure, who was labelled as a “Canadian activist,” a somewhat positive term, but is now described as a “Canadian author.”

The seemingly random subtitle changes show that the programming of the algorithmic black boxes is not static, but changes based on several indicators that are still unknown to us.

Searching in Arabic vs. English

A second important new finding from our research is related to the differences in the subtitle results based on the language selected for the search. I speak and read Arabic, so I changed the language setting and searched for the same figures to understand how they are described in Arabic.

To my surprise, I found several major differences between English and Arabic. Once again, there was nothing negative in the descriptions of some of the figures that I searched for. Alex Jones becomes a “TV presenter of talk shows,” and Lauren Southern is erroneously described as a “politician.”

And there’s much more from the Arabic language searches: Faith Goldy becomes an “expert,” David Icke transforms from a “former footballer” into an “author” and Jake Angeli, the “QAnon shaman,” becomes an “actor” in Arabic and an “American activist” in English.

Richard B. Spencer becomes a “publisher” and Dan Bongino, a conspiracist permanently banned from YouTube, transforms from an “American radio host” in English to a “politician” in Arabic. Interestingly, the far-right figure, Tommy Robinson, is described as a “British-English political activist” in English but has no subtitle in Arabic.
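
Side-by-side comparisons like these can be recorded in a small table and checked programmatically. The following is a minimal Python sketch, not the method used in the research. The `labels` dictionary only transcribes English and Arabic subtitles reported in this article, and the `compare_labels` helper is a hypothetical name introduced for illustration. The Arabic values are the English translations given above, so a plain string comparison is only a rough signal.

```python
# Subtitles as reported in this article: name -> (English, Arabic).
# None means no subtitle was shown for that language.
# Arabic values are the article's own English translations.
labels = {
    "David Icke": ("former footballer", "author"),
    "Jake Angeli": ("American activist", "actor"),
    "Dan Bongino": ("American radio host", "politician"),
    "Tommy Robinson": ("British-English political activist", None),
}


def compare_labels(observations):
    """Classify each person as 'missing', 'different' or 'same'.

    'missing' means at least one language showed no subtitle.
    """
    result = {}
    for name, (english, arabic) in observations.items():
        if english is None or arabic is None:
            result[name] = "missing"
        elif english != arabic:
            result[name] = "different"
        else:
            result[name] = "same"
    return result


if __name__ == "__main__":
    for name, status in compare_labels(labels).items():
        print(f"{name}: {status}")
```

Repeating the same searches at a later date and storing the results in the same shape would also make it possible to track how the subtitles change over time.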

Misleading labels

What we can infer from these language differences is that these descriptors are insufficient, because they condense one’s description to one or a few words that can be misleading.

Understanding how algorithms function is important, especially as misinformation and distrust are on the rise and as conspiracy theories are still spreading rapidly. We also need more insight into how Google and other search engines work, and it is important to hold these companies accountable for their biased and ambiguous algorithms.

This article by Ahmed Al-Rawi, Assistant Professor, News, Social Media, and Public Communication, Simon Fraser University, is republished from The Conversation under a Creative Commons license. Read the original article.
