IBM today announced a new strategy for the implementation of several “error mitigation” techniques designed to bring about the era of fault-tolerant quantum computers.

Up front: Anyone still clinging to the notion that quantum circuits are too noisy for useful computing is about to be disillusioned.

A decade ago, the idea of a working quantum computing system seemed far-fetched to most of us. Today, researchers around the world connect to IBM’s cloud-based quantum systems with such frequency that, according to IBM’s director of quantum infrastructure, some three billion quantum circuits are completed every day.

IBM and other companies are already using quantum technology to do things that either couldn’t be done by classical binary computers or would take too much time or energy. But there’s still a lot of work to be done.

The dream is to create a useful, fault-tolerant quantum computer capable of demonstrating clear quantum advantage — the point where quantum processors are capable of doing things that classical ones simply cannot.

Background: Here at Neural, we identified quantum computing as the most important technology of 2022 and that’s unlikely to change as we continue the perennial march forward.

The long and short of it is that quantum computing promises to do away with our current computational limits. Rather than replacing the CPU or GPU, it’ll add the QPU (quantum processing unit) to our tool belt.

What this means is up to the individual use case. Most of us don’t need quantum computers because our day-to-day problems aren’t that difficult.

But, for industries such as banking, energy, and security, the existence of new technologies capable of solving problems more complex than today’s technology can handle represents a paradigm shift the likes of which we may not have seen since the advent of steam power.

If you can imagine a magical machine capable of increasing efficiency across numerous high-impact domains — it could save time, money, and energy at scales that could ultimately affect every human on Earth — then you can understand why IBM and others are so keen on building QPUs that demonstrate quantum advantage.

The problem: Building hardware that harnesses quantum mechanics to perform computations is, as you can imagine, very hard.

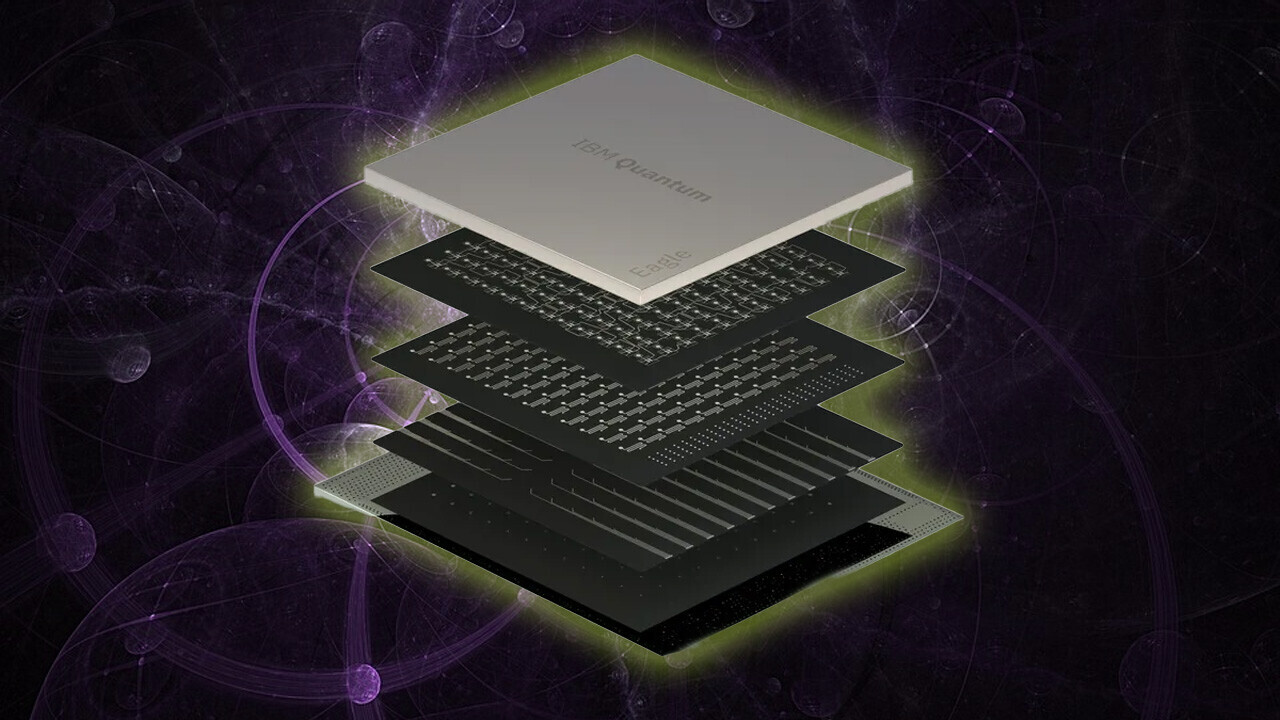

IBM’s spent the past decade or so figuring out how to solve the foundational problems plaguing the field, including the basic infrastructure, cooling, and power source requirements necessary just to get started in the labs.

Today, IBM’s quantum roadmap shows just how far the industry has come.

But to get where it’s going, the industry needs to solve one of the few remaining foundational problems related to the development of useful quantum processors: they’re noisy as heck.

The solution: Noisy qubits are the quantum computer engineer’s current bane. The more processing power you try to squeeze out of a quantum computer, the noisier its qubits get (qubits are the quantum counterpart of classical computer bits).

Until now, the bulk of the work in squelching this noise has involved scaling qubits so that the signal the scientists are trying to read is strong enough to squeeze through.

In the experimental phases, solving noisy qubits was largely a game of whack-a-mole. As scientists came up with new techniques, many of which were pioneered in IBM laboratories, they pipelined them to researchers for novel application.

But, these days, the field has advanced quite a bit. The art of error mitigation has evolved from targeted one-off solutions to a full suite of techniques.

Per IBM:

Current quantum hardware is subject to different sources of noise, the most well-known being qubit decoherence, individual gate errors, and measurement errors. These errors limit the depth of the quantum circuit that we can implement. However, even for shallow circuits, noise can lead to faulty estimates. Fortunately, quantum error mitigation provides a collection of tools and methods that allow us to evaluate accurate expectation values from noisy, shallow depth quantum circuits, even before the introduction of fault tolerance.

In recent years, we developed and implemented two general-purpose error mitigation methods, called zero noise extrapolation (ZNE) and probabilistic error cancellation (PEC).

Both techniques involve extremely complex applications of quantum mechanics, but they basically boil down to finding ways to eliminate or squelch the noise coming off quantum systems and/or to amplify the signal that scientists are trying to measure for quantum computations and other processes.
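To make the ZNE idea a little more concrete, here is a minimal sketch of the extrapolation step. It is not IBM’s implementation: the exponential noise model, the function names `noisy_expectation` and `zero_noise_extrapolate`, and the chosen noise scales are all assumptions made for illustration. The general recipe it follows is to measure the same quantity at deliberately amplified noise levels, fit a curve through those measurements, and read off the value the curve predicts at zero noise.

```python
import numpy as np

def noisy_expectation(scale, ideal=1.0, decay=0.05):
    """Toy stand-in for running a circuit on hardware (an assumption).

    The measured expectation value shrinks exponentially as the noise
    scale grows; scale=1.0 represents the device's native noise level.
    """
    return ideal * np.exp(-decay * scale)

def zero_noise_extrapolate(scales, values, degree=2):
    """Fit a polynomial to (noise scale, value) pairs and evaluate it at scale 0."""
    coeffs = np.polyfit(scales, values, degree)
    return float(np.polyval(coeffs, 0.0))

# Measure at the native noise level and at two amplified levels.
scales = np.array([1.0, 2.0, 3.0])
values = np.array([noisy_expectation(s) for s in scales])

unmitigated = float(values[0])
mitigated = zero_noise_extrapolate(scales, values)

print(f"unmitigated estimate: {unmitigated:.4f}")
print(f"mitigated estimate:   {mitigated:.4f}")
```

In this toy setup the ideal answer is 1.0, and the extrapolated estimate lands much closer to it than the raw measurement at native noise does. On real hardware the noise rarely follows such a tidy curve, so the choice of scales and fit is a judgment call rather than a given.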

Neural’s take: We spoke to IBM’s director of quantum infrastructure, Jerry Chow, who seemed pretty excited about the new paradigm.

He explained that the techniques being touted in the new press release were already in production. IBM’s already demonstrated massive improvements in its ability to scale solutions, repeat cutting-edge results, and speed up classical processes using quantum hardware.

The bottom line is that quantum computers are here, and they work. Currently, it’s a bit hit or miss whether they can solve a specific problem better than classical systems, but the last remaining hard obstacle is fault tolerance.

IBM’s new “error mitigation” strategy signals a change from the discovery phase of fault-tolerance solutions to implementation.

We tip our hats to the IBM quantum research team. Learn more here at IBM’s official blog.
