Vivek Wadhwa and Mauritz Kop recently penned an op-ed urging governments around the world to get ahead of the threat posed by the emerging technology known as quantum computing. They even went so far as to title their article “Why Quantum Computing is Even More Dangerous Than Artificial Intelligence.”

Up front: This one gets a very respectful hard-disagree from me. While I believe quantum computing does pose an existential threat to humanity, my reasons differ wildly from those proposed by Wadhwa and Kop.

Point

Wadhwa and Kop open their article with a description of AI’s failures, its potential for misuse, and the way the media’s narrative has exacerbated the danger of AI, before settling on a powerful lead:

The world’s failure to rein in the demon of AI—or rather, the crude technologies masquerading as such—should serve to be a profound warning. There is an even more powerful emerging technology with the potential to wreak havoc, especially if it is combined with AI: quantum computing. We urgently need to understand this technology’s potential impact, regulate it, and prevent it from getting into the wrong hands before it is too late. The world must not repeat the mistakes it made by refusing to regulate AI.

The duo’s article then goes on to describe the nature of quantum computers and the current state of research before settling on its next important point:

Given the potential scope and capabilities of quantum technology, it is absolutely crucial not to repeat the mistakes made with AI—where regulatory failure has given the world algorithmic bias that hypercharges human prejudices, social media that favors conspiracy theories, and attacks on the institutions of democracy fueled by AI-generated fake news and social media posts. The dangers lie in the machine’s ability to make decisions autonomously, with flaws in the computer code resulting in unanticipated, often detrimental, outcomes.

They also describe the problem with current encryption standards and the need for quantum-resistant technology and new standards to prevent corporate and national secrets from being exposed to US adversaries:

Patents, trade secrets, and related intellectual property rights should be tightly secured—a return to the kind of technology control that was a major element of security policy during the Cold War. The revolutionary potential of quantum computing raises the risks associated with intellectual property theft by China and other countries to a new level.

Finally, the article ends with a call for common-sense legislation:

Governments must urgently begin to think about regulations, standards, and responsible uses—and learn from the way countries handled or mishandled other revolutionary technologies, including AI, nanotechnology, biotechnology, semiconductors, and nuclear fission.

Counterpoint

I’ve written extensively about quantum computing. I believe it has the potential to be the most transformative technology in history. But the threat it poses is, in my opinion, more closely related to that of fusion than, say, a knife.

As Wadhwa and Kop point out, the utter failure by the US government to establish even a modicum of human-centered regulations or policies concerning the misuse of AI has resulted in a development environment where bias is not only acceptable but a given.

But no amount of government oversight and policy enforcement is going to change the fact that anyone with internet access and the will to succeed can create, train, and deploy models.

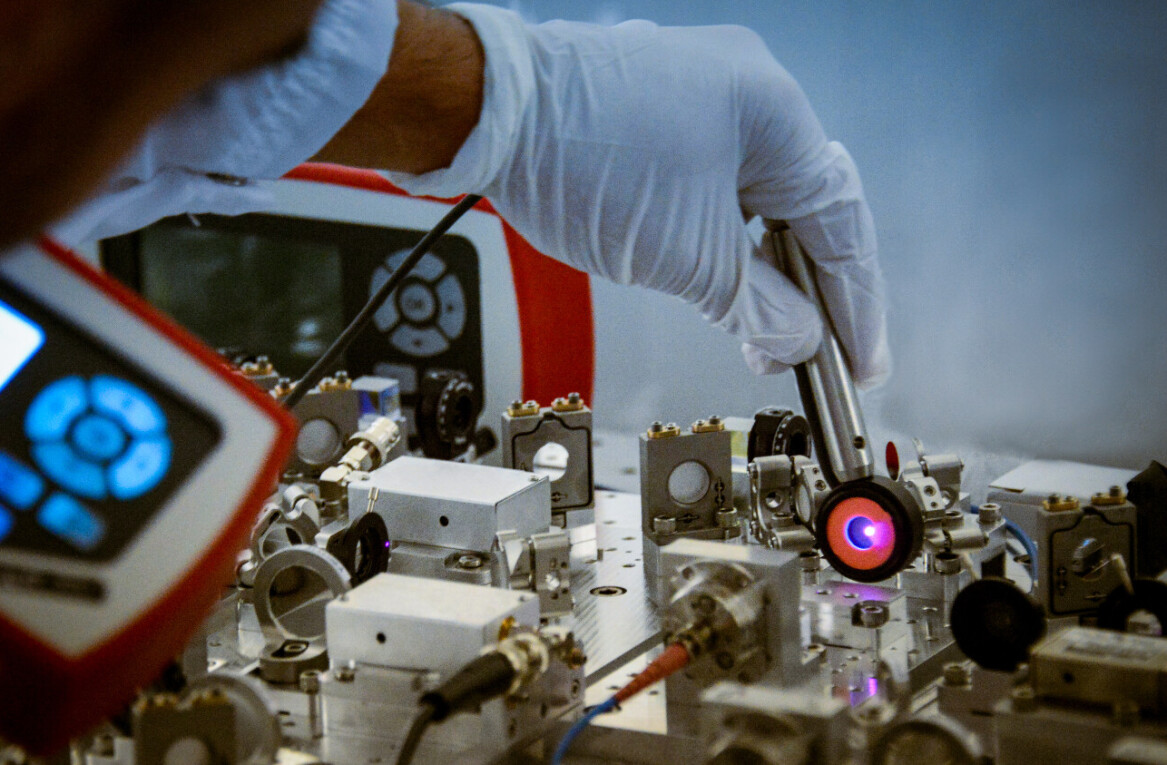

It’s a bit harder to build a functional quantum computer capable of performing adversarial decryption tasks.

Wadhwa and Kop are absolutely correct in their calls for some regulation — though I’m vehemently against the idea that the US, or any country, should in any way “return to the kind of technology control that was a major element of security policy during the Cold War” when it comes to quantum computing. Physics is not a trade or military secret.

Encryption, by its very nature, doesn’t require secrecy. And quantum computers, for all the hope they represent, only promise to speed things up. The world is already taking steps to mitigate the threat of quantum decryption.
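To put a rough number on "speed things up," here is a back-of-the-envelope sketch in Python. It assumes the textbook result that Grover's algorithm can search an unstructured space of N keys in roughly the square root of N steps, so an n-bit symmetric key retains about n/2 bits of security against that kind of search. The function names are hypothetical, and the figures ignore constant factors and hardware overhead. Public-key schemes such as RSA face a different and much larger speedup from Shor's algorithm, which this sketch does not model.

```python
def classical_search_steps_log2(key_bits: int) -> float:
    """log2 of the worst-case number of keys a classical brute-force search tries."""
    return float(key_bits)


def grover_search_steps_log2(key_bits: int) -> float:
    """log2 of the approximate Grover iteration count, about sqrt(2**key_bits).

    Assumption: ideal, error-free quantum hardware and no constant factors.
    """
    return key_bits / 2


for bits in (128, 256):
    classical = classical_search_steps_log2(bits)
    grover = grover_search_steps_log2(bits)
    print(f"{bits}-bit key: classical ~2^{classical:.0f} steps, "
          f"Grover ~2^{grover:.0f} steps")
```

Under these assumptions, doubling the length of a symmetric key restores the original security margin, which is part of why mitigation looks feasible.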

The truth is that AI and quantum computing pose exactly as big a threat as humanity’s ability to use them for evil.

Right now, billions of humans are being actively manipulated by biased algorithms in every conceivable sector ranging from social media and employment seeking to healthcare and law enforcement.

As previously mentioned, anyone with internet access and the will to succeed can learn to build and deploy AI models.

It costs millions — billions even — to build a quantum computer. And the companies and labs building them already face a far stricter regulatory environment in the US than their machine learning-only counterparts.

As for the potential harm quantum computers might cause? Aside from the pair’s concerns about quantum decryption, it appears their major worry is that quantum computers will exacerbate the existing issues with inequity and algorithmic bias.

To that, I’d argue that quantum computers represent our biggest hope at breaking free from the “bullshit in, bullshit out” paradigm that deep learning has sucked the entire field of artificial intelligence into.

Instead of relying on wall-sized stacks of GPUs to brute-force inferences from pig-trough-sized buckets of unlabeled data and hoping prime rib and filet mignon will come out the other end, quantum computers could open up entire new computation methods for dealing with smaller batches of data more efficaciously.

Summation

I believe the “threat” of AI could have been somewhat mitigated with stricter regulation (it’s never too late for the US and other actors to follow the EU’s lead). But the accessibility of AI technology makes it a clear and present threat to every single human on Earth.

Quantum computing technology is poised to help us solve many of the problems created by the “scale is all you need” crowd.

In the end, I think it’s about putting the right technology in the right hands at the right time. Artificial intelligence is a knife: a tool that can be used for good or evil by just about anyone. Quantum computing is like fusion: it could potentially cause massive harm at unprecedented scale, but the cost of entry is high enough to bar the vast majority of people on the planet from accessing it.

Historically speaking, it doesn’t make sense to condemn fusion or quantum computing based on the hypothetical things that could go wrong. Where would we be without nuclear energy? The call for greater oversight and common-sense regulation is necessary, but the conflation with AI technology seems unwarranted.

AI is clearly dangerous, and that’s where I think regulatory bodies, the media, and the general public should focus their concerns.
