Sometimes, the smoking gun in a sexual child abuse case can be something totally unremarkable — a plastic bag in the corner, or a piece of rug on the floor.

Dutchman Leon D. was exposed by a church window. In 2011, he was convicted of molesting two young boys and of producing and distributing imagery of the abuse.

Leon D.’s pornographic pictures showed a church window across the street, visible through the window in the back of the room. Together with Dutch police, researchers wrote an algorithm that made use of Google Earth images to find that exact same church window. It located the church in a small town, Den Bommel. Leon D.’s home was right across the street.

Another infamous, large-scale case of sexual child abuse, in which daycare employee Robert M. molested 83 young children, was solved with the help of various research projects at the University of Amsterdam led by Marcel Worring. He’s the director of the university’s Informatics Institute and a professor of data science for business analytics.

Beds and teddy bears

Worring and his team developed an algorithm that can recognize objects commonly used in sexual imagery of children. “Things like beds or teddy bears, for example,” he tells me. “And obviously, children.”

It’s a system similar to what Google Photos uses to categorize your cat pictures, with the big difference that Google has a never-ending collection of cat pictures to feed the algorithm.

Teaching a computer what sexual child abuse looks like would be easier with large image datasets, but in most cases, sexual imagery cannot be shared with researchers for obvious privacy reasons.

A spin-off company from the research group continued developing the algorithm and checking the material, after its employees were screened and legally allowed to see it.

The ambiguity of what counts as abuse poses another challenge. “When a pornographic video shows a young child, the system has no problem labeling it correctly,” says Worring. “But with 17-year-olds, most human experts can’t even tell the difference.”

Another algorithm by researchers at the University of Amsterdam translates images into text. “Textual descriptions are more specific because they also convey the relationship between objects,” adds Worring. The sentence “the child is on the bed” allows for a narrower search than a visual tool that only looks for images containing both children and beds.

Tech giants doing their own thing

Visual image analysis is just one instrument in the abuse detection toolbox. While law enforcement organizations worldwide are working on software to identify abusive content, tech giants like Google, IBM, and Facebook are building tools to do the same on their own platforms. Often, these tools are openly available to other parties.

There’s PhotoDNA, created by Microsoft, which generates a signature (or hash) for each image, video, or audio file that can then be compared with other content to see if there’s a match. And more recently, Google launched an AI-based tool that not only compares new material against these known hashes but also identifies illegal material that hasn’t been flagged in the past.
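To give a rough idea of how signature matching works in general, here is a minimal sketch in Python. It is not PhotoDNA, whose algorithm this article does not describe. It uses a much simpler, well-known technique called an average hash, and the function names, the tiny 4x4 grayscale grids, and the `max_distance` threshold are all assumptions made for illustration.

```python
from typing import List

Grid = List[List[int]]  # grayscale pixel values, 0-255 (toy stand-in for an image)


def average_hash(pixels: Grid) -> int:
    """Build a bit signature: 1 where a pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    signature = 0
    for value in flat:
        signature = (signature << 1) | (1 if value > mean else 0)
    return signature


def hamming_distance(a: int, b: int) -> int:
    """Count the bits in which two signatures differ."""
    return bin(a ^ b).count("1")


def matches_known(signature: int, known: List[int], max_distance: int = 2) -> bool:
    """True if the signature is close to any previously flagged signature."""
    return any(hamming_distance(signature, k) <= max_distance for k in known)


# Hypothetical data: a flagged image, a brightened copy, and an unrelated image.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 28, 225],
    [11, 198, 35, 215],
]
brighter_copy = [[min(255, value + 20) for value in row] for row in original]
unrelated = [[255 - value for value in row] for row in original]

known_signatures = [average_hash(original)]
copy_is_match = matches_known(average_hash(brighter_copy), known_signatures)
unrelated_is_match = matches_known(average_hash(unrelated), known_signatures)
```

Because the signature depends on each pixel relative to the image's own mean, the uniformly brightened copy still matches while the unrelated image does not. That tolerance to small changes is what separates this kind of signature from an ordinary cryptographic checksum, and only the signatures, not the images themselves, need to be compared.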

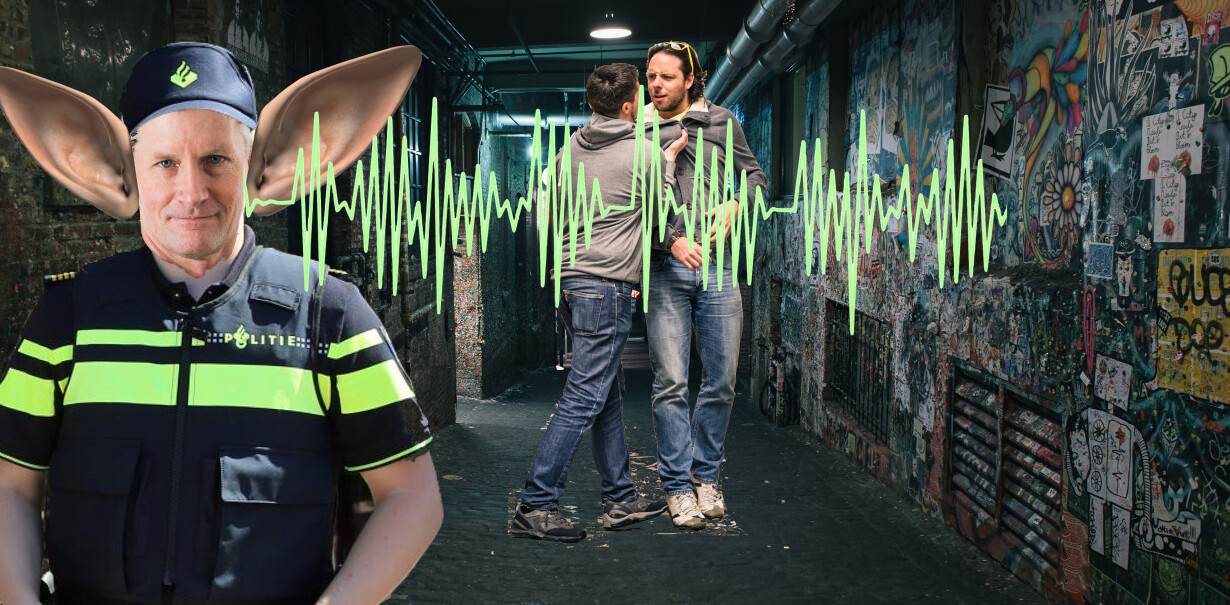

Both technologies are available to law enforcement organizations, but using them is rather problematic, says Peter Duin, a researcher for the Dutch police.

“We are talking about extremely privacy-sensitive information here — we can’t just upload those pictures to Google Cloud. And even if their software is completely secure, we would still be sharing data with third parties. What if someone working for Google decides to make a copy?”

Sometimes the end will justify the means, adds Duin. “If we’re sure we can solve a case by using one of Google’s products, we would probably make an exception.”

Up to a million files

New technologies and better cooperation with foreign organizations have made it easier for the Dutch police to track down sexual child abuse. In 2017, 130 Dutch victims were identified.

Unfortunately, the number of reported cases is also increasing drastically. In 2012, the police unit dedicated to “child pornography” and “child sex tourism” (known as TBKK) received 2,000 reports of (supposedly) Dutch users uploading and downloading sexually abusive imagery. This number increased to 12,000 in 2016 and, this year, it will probably reach 30,000.

“The internet has completely changed the playing field,” says Duin. “Doing this job 15 years ago, we were stunned every time we came across a van crammed with porn videos and DVDs — how could any person own so much child pornography? These days, we seize hard drives with up to a million files.”

In that sense, technology has been a double-edged sword in the battle against sexual imagery of children. And despite its abundance on the web, TBKK still employs the same number of officers — about 150 people. There’s no budget available to expand the team. “So the sad truth is we see more than we used to, but lack the capacity to follow up on all of these cases,” says Duin.

Combining data

In order to pick up the slack, new technologies like abuse-detecting algorithms will need to become even better. Worring predicts future tools will be able to do so by combining different data. “So instead of just analyzing images, it can also take into account who posted the material, when this happened, and who it was shared with,” he says.

The way human experts interact with these algorithms will change as well, he adds. “Right now, the algorithm spits out results and a detective checks if these images really depict abuse. But their feedback isn’t shared with the algorithm, so it doesn’t learn anything. In the future, the process will be a two-way street where humans and computers help each other improve.”
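The two-way street Worring describes could, in its simplest form, look something like the sketch below. It is an illustration only, not the researchers' system: it uses a basic perceptron, and the class name, the two-number feature vectors, and the learning rate are all hypothetical.

```python
from typing import List


class FeedbackClassifier:
    """A tiny perceptron that is corrected whenever a reviewer disagrees with it."""

    def __init__(self, n_features: int, learning_rate: float = 0.5) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def flag(self, features: List[float]) -> bool:
        """Return True if the model would flag this item for review."""
        score = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return score > 0

    def review(self, features: List[float], reviewer_label: bool) -> None:
        """Feed the reviewer's verdict back; adjust weights only on a mistake."""
        if self.flag(features) == reviewer_label:
            return
        direction = 1.0 if reviewer_label else -1.0
        self.weights = [
            w + self.learning_rate * direction * x
            for w, x in zip(self.weights, features)
        ]
        self.bias += self.learning_rate * direction


# Hypothetical feature vectors with the verdicts a human reviewer gave them.
reviewed_items = [
    ([1.0, 0.0], True),   # reviewer confirmed the flag
    ([0.0, 1.0], False),  # reviewer rejected the flag
]

model = FeedbackClassifier(n_features=2)
for _ in range(3):  # a few passes over the reviewer feedback
    for features, verdict in reviewed_items:
        model.review(features, verdict)

flags_confirmed_item = model.flag([1.0, 0.0])
flags_rejected_item = model.flag([0.0, 1.0])
```

The point of the design is that every verdict the detective gives nudges the model, so the next batch of flagged files should contain fewer false alarms. A production system would use far richer models and features, but the loop has the same shape.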

It’s a striking thought: While AI is threatening jobs everywhere, detecting child abuse is something we’d love to fully outsource to robots.

Worring isn’t sure that will ever be possible. “But we can make the system more accurate to minimize exposure,” he adds. “So instead of needing three days to go through all the flagged files, the police may only need a few hours — or even minutes.”

