A researcher at Wageningen University & Research recently published a pre-print article detailing a system by which facial recognition AI could be used to identify and measure the emotional state of farm animals. If you’re imagining a machine that tells you if your pigs are joyous or your cows are grumpy… you’re spot on.

Up front: There’s little evidence to believe that so-called ‘emotion recognition’ systems actually work. In the sense that humans and other creatures can often accurately recognize (as in: guess) other people’s emotions, an AI can be trained on a human-labeled data set to recognize emotion with similar accuracy to humans.

However, there’s no ground truth when it comes to human emotion. Everyone experiences and interprets emotions differently, and how we express emotion on our faces can vary wildly with cultural background and individual biology.

In short: The same ‘science’ behind systems that claim to tell through facial recognition whether someone is gay, or whether a person is likely to be aggressive, is also behind emotion recognition for people and farm animals.

Basically, nobody can tell if another person is gay or aggressive just by looking at their face. You can guess. And you might be right. But no matter how many times you’re right, it’s always a guess and you’re always operating on your personal definitions.

That’s how emotion recognition works too. What you might interpret as “upset” might just be someone’s normal expression. What you might see as “gay,” well... I defy anyone to define internal gayism (i.e., do thoughts or actions make you recognizably gay?).

It’s impossible to “train” a computer to recognize emotions because computers don’t think. They rely on data sets labeled by humans. Humans make mistakes. Worse, it’s ridiculous to imagine any two humans would look at a million faces and come to a blind consensus on the emotional state of each person viewed.

Researchers don’t train AI to recognize emotion or make inferences from faces. They train AI to imitate the perceptions of the specific humans who labeled the data they’re using.
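To make that concrete, here’s a minimal Python sketch. Everything in it is made up for illustration: the two annotators, their labels, and the “model,” which is assumed to have learned one annotator’s labels perfectly. None of it comes from the paper.

```python
# Hypothetical labels from two human annotators for the same ten face images.
annotator_a = ["calm", "upset", "calm", "neutral", "upset",
               "calm", "neutral", "calm", "upset", "neutral"]
annotator_b = ["calm", "neutral", "calm", "neutral", "upset",
               "neutral", "neutral", "calm", "calm", "neutral"]


def agreement(labels_x, labels_y):
    """Return the fraction of items on which two label lists match."""
    matches = sum(x == y for x, y in zip(labels_x, labels_y))
    return matches / len(labels_x)


# Assumption: an idealized model trained only on annotator A's labels
# reproduces those labels exactly.
model_predictions = list(annotator_a)

score_vs_a = agreement(model_predictions, annotator_a)
score_vs_b = agreement(model_predictions, annotator_b)

print(f"Agreement with annotator A: {score_vs_a:.0%}")
print(f"Agreement with annotator B: {score_vs_b:.0%}")
```

The same predictions look flawless when scored against the person who labeled the training data and noticeably worse when scored against someone else. Neither number says anything about what the faces in the images were actually feeling.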

That being said: Creating an emotion recognition engine for animals isn’t necessarily a bad thing.

Here’s a bit from the researcher’s paper:

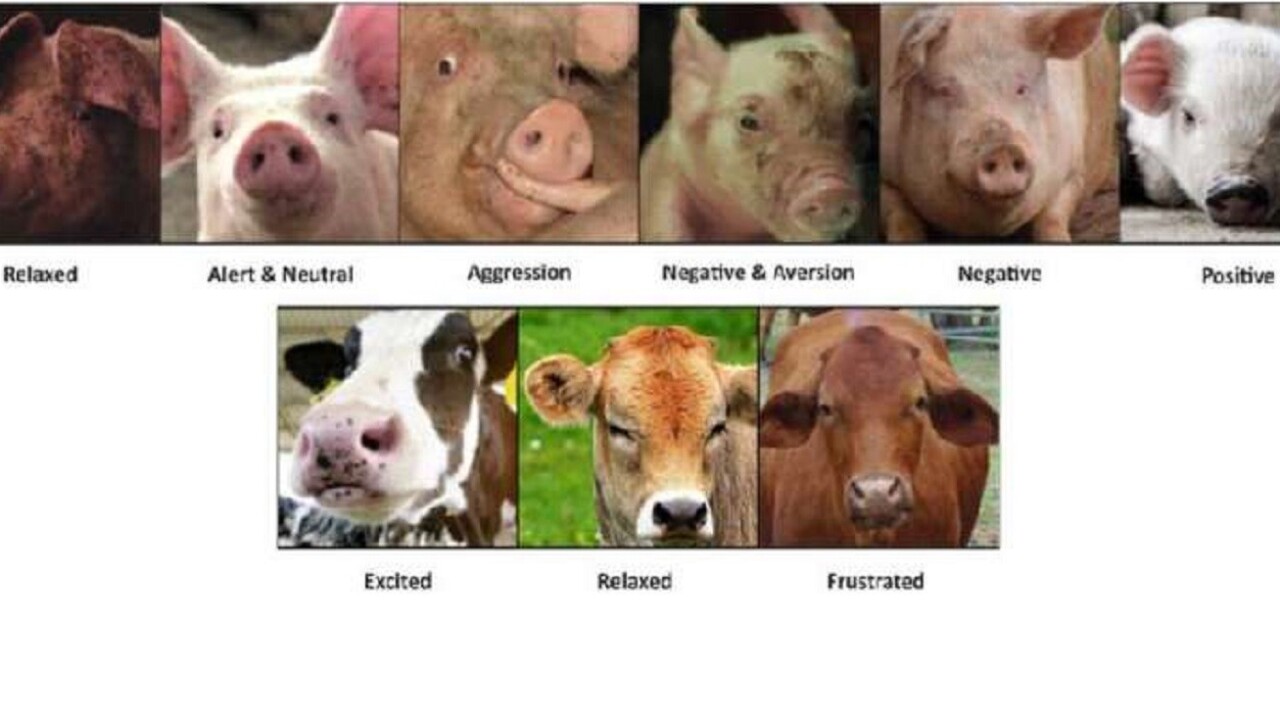

The system is trained on dataset of facial features of images of the farm animals collected in over 6 farms and has been optimized to operate with an average accuracy of 85%. From these, we infer the emotional states of animals in real time. The software detects 13 facial actions and 9 emotional states, including whether the animal is aggressive, calm, or neutral.

The paper goes on to describe the system as a high-value, low-impact machine learning paradigm where farmers can gauge livestock comfort in real time using cameras instead of invasive procedures such as hormone sampling.

We covered something similar in the agricultural world a while back. Basically, farmers operating orchards can use image recognition AI to determine if any of their trees are sickly. When you have tens of thousands of trees, performing a timely visual inspection on each one is impossible for humans. But AI can stare at trees all day and night.

AI for livestock monitoring is a different beast altogether. Instead of recognizing specifically defined signs of disease in relatively motionless trees, the researcher’s attempting to tell what mood a bunch of animals are in.

Does it work? According to the researcher, yes. But according to the research: kinda. The paper makes claims of incredibly high accuracy, but that’s when compared against human spotters.

So here’s the thing: Creating an AI that can tell what pigs and cows are thinking almost as accurately as the world’s leading human experts is a lot like creating a food so delicious it impresses a chef. Maybe the next chef doesn’t like it, maybe nobody but that chef likes it.

The point is: this system uses AI to do a slightly poorer job than a farmer can at determining what a cow is thinking by looking at it. There’s value in that, because farmers can’t stare at cows all day and night waiting for one of them to grimace in pain.

Here’s why this is fine: Because there’s a slight potential that the animals could be treated a tiny bit better. While it’s impossible to tell exactly what an animal is feeling, the AI can certainly recognize signs of distress, discomfort, or pain well enough to make it worthwhile to employ this system in places where farmers could and would intervene if they thought their animals were in discomfort.

Unfortunately, the main reason this matters is that livestock living in relative comfort tends to produce more.

It’s a nice fantasy to imagine a small, farm-to-table family installing cameras all over their massive free-range livestock facility. But, more likely, systems like this will help corporate farmers find the sweet spot between packing animals in and keeping their stress levels just low enough to produce.

Final thoughts: It’s impossible to predict what the real-world use cases for this will be, and there are definitely some strong ones. But it muddies the water when researchers compare a system that monitors livestock to an emotion recognition system for humans.

Whether a cow gets a little bit of comfort before it’s slaughtered or as it spends the entirety of its life connected to dairy machinery isn’t the same class of problem as dealing with emotion recognition for humans.

Consider the fact that, for example, emotion recognition systems tend to classify Black men’s faces as angrier than white men’s. Or that women typically rate pain higher when they perceive it in people and animals. Which bias do we train the AI with?

Because, based on the current state of the technology, you can’t train an AI without bias unless the data you’re generating is never touched by human hands, and even then you’re creating a separate bias category.

You can read the whole paper here.
